Learning as a Superpower: Deep Understanding with AI

When AI provides instant answers, true technical expertise lies not in knowing answers, but in learning deeply and asking the right questions.

The Paradox of Abundant Knowledge

This month, as I’ve been working with several new teams, the concepts of learning and accelerating understanding have been at the forefront of my mind—particularly how AI fits into everyone’s learning toolkit. As a seasoned technical leader who’s witnessed multiple paradigm shifts over the past 30 years, I’ve noticed a curious paradox emerging: as AI tools make information more accessible than ever, deep understanding becomes increasingly valuable.

Ten years ago, the ability to recall syntax or implement complex algorithms from memory was considered impressive. Today, a well-crafted prompt to your AI coding assistant can generate solutions in seconds, complete with documentation and performance analysis. But with information at our fingertips, the advantage no longer lies in knowing the answers—it’s in understanding how to work with, evaluate, and build upon those answers.

This shift represents the new challenge for technical expertise: in an era of AI-generated solutions, success depends not on knowing all the answers, but on knowing why the answers matter and how to transform them into meaningful progress.

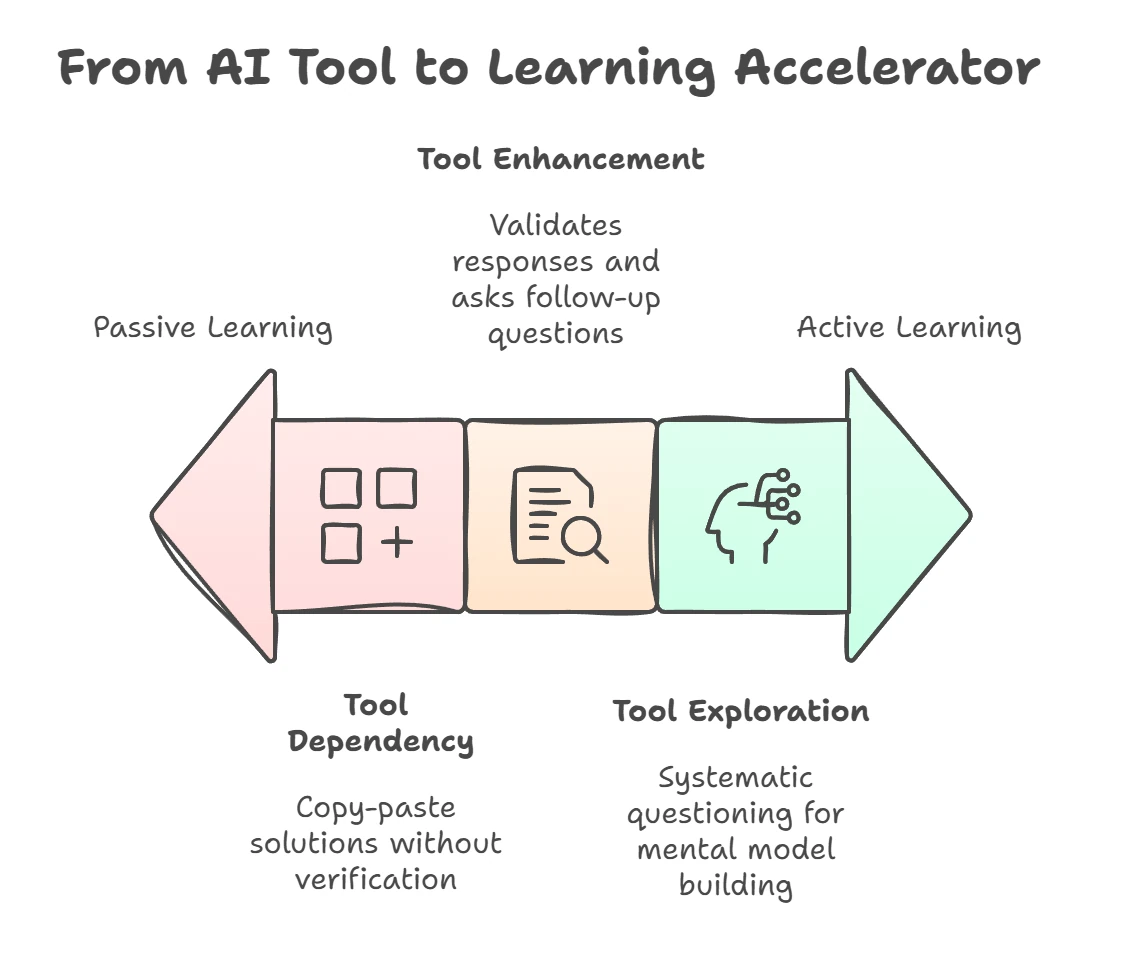

This shift creates an important distinction between two modes of engaging with technical work:

- Answer-seeking: Using tools to obtain ready-made solutions without fully grasping the underlying principles

- Understanding-building: Leveraging tools while simultaneously developing mental models that enable creative problem-solving and innovation

The first makes you a consumer of AI outputs; the second empowers you as an orchestrator of AI capabilities.

Beyond Prompt Engineering: The Mental Models That Matter

The conversations around AI tools often focus on prompt engineering: how to phrase requests to get optimal responses. While important, this perspective misses something far more fundamental: the mental models that allow you to distinguish good answers from bad ones, identify gaps, and apply solutions appropriately.

According to research from the Journal of Cognitive Engineering and Decision Making, experts across domains rely not just on facts but on sophisticated mental representations that help them categorize problems and identify solution paths. These models develop primarily through deliberate practice and sustained learning experiences—not from simply obtaining answers.

What Makes Mental Models So Powerful?

They provide context for evaluating information. Without a robust mental model, how do you know if an AI-provided solution is optimal, merely adequate, or potentially problematic?

They enable creative connections. True innovation often comes from connecting ideas across domains, something AI tools struggle with but well-developed mental models excel at.

They help identify what’s missing. The most dangerous bugs aren’t in what the code does, but what it doesn’t do. Strong mental models help you spot these gaps.

They build resilience against tool limitations. When tools fail or provide misleading information, mental models provide the foundation for independent problem-solving.

As one engineering leader at NVIDIA recently noted: “AI-assisted development doesn’t replace the need for strong engineering fundamentals—it amplifies them. The engineers who benefit most are those who could solve the problem without AI, but use it to solve problems faster.”

The Craft of Learning vs. The Convenience of Knowing

There’s a fundamental difference between learning and simply knowing an answer:

| Knowing | Learning |
|---|---|
| Static | Dynamic |
| Fact-oriented | Process-oriented |
| Answer-focused | Understanding-focused |
| Fragile (easily outdated) | Resilient (adaptable to change) |
| Tool-dependent | Tool-enhanced |

In technical domains, this distinction becomes particularly important. Consider two scenarios:

Scenario A: You need to optimize a database query. You paste it into an AI assistant, which returns an optimized version. It works, and you implement it.

Scenario B: You need to optimize a database query. You research query planning, understand the execution path, learn about indexing strategies, and then use an AI assistant to validate your approach and suggest improvements.

In Scenario A, you’ve solved an immediate problem. In Scenario B, you’ve solved the problem while simultaneously building a mental model that will help you solve countless future problems—and evaluate AI-generated solutions more effectively.
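To make Scenario B a little more concrete, here is a minimal sketch of what "understanding the execution path" can look like in practice. It uses Python's built-in `sqlite3` module and SQLite's `EXPLAIN QUERY PLAN`. The `orders` table, its columns, the index name, and the `query_plan` helper are all invented for illustration, and other databases expose their plans differently.

```python
import sqlite3


def query_plan(conn, sql, params=()):
    """Return the human-readable 'detail' column of EXPLAIN QUERY PLAN."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return [row[3] for row in rows]


# Hypothetical schema and data, held in memory so the sketch is self-contained.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)"
)
conn.executemany(
    "INSERT INTO orders (customer_id, total) VALUES (?, ?)",
    [(i % 100, i * 1.5) for i in range(1000)],
)

sql = "SELECT * FROM orders WHERE customer_id = ?"

# Step 1: look at the plan for the query as it stands.
plan_before = query_plan(conn, sql, (42,))

# Step 2: apply the indexing strategy you researched, then look again.
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
plan_after = query_plan(conn, sql, (42,))

print("before:", plan_before)
print("after: ", plan_after)
```

On a typical SQLite build, the first plan reports a scan of `orders` and the second a search that uses `idx_orders_customer`, though the exact wording varies by version. Reading the plan yourself first gives you something to check an AI-suggested rewrite against.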

Asking Better Questions: The Hidden Superpower

Perhaps counterintuitively, in an age of instant answers, the ability to ask good questions becomes more valuable than having ready answers. This is particularly true when working with AI tools, where question quality directly impacts answer quality.

According to research on technical expertise by Ericsson and Pool, experts don’t just have more knowledge—they ask fundamentally different questions than novices. These questions tend to be:

- More structured: Framed within coherent mental models

- More precise: Using domain-specific language and concepts

- More contextual: Accounting for situational factors and constraints

- More anticipatory: Predicting potential issues and edge cases

In my own experience mentoring technical teams, I’ve observed that senior engineers often don’t need to ask AI tools for complete solutions—instead, they ask for specific insights that fill gaps in their existing understanding. They’re not outsourcing thinking; they’re augmenting it.

The Evolution of Technical Questions

The maturity of your questions directly impacts the value of AI responses. Consider this progression:

Novice Questions typically focus on immediate solutions:

- “How do I do X?”

- “What is the code for Y?”

- “Fix this error”

These generate complete solutions with little learning value. The AI responds with ready-made answers that solve immediate problems but don’t build understanding.

Intermediate Questions show more process awareness:

- “What approach works best for X?”

- “Why doesn’t my solution to Y work?”

- “What are the tradeoffs between A and B?”

Here, you’re starting to think about approaches and comparisons rather than just seeking solutions. AI responses provide multiple options with some reasoning.

Advanced Questions demonstrate systems thinking:

- “Given constraints X and Y, what patterns might apply here?”

- “How would this solution scale under conditions Z?”

- “What edge cases should I consider in this context?”

These questions show you understand the broader technical landscape. They’re principle-focused with rich context, generating nuanced AI analysis that supports deeper learning.

Expert Questions reveal sophisticated mental models:

- “How might concept X from domain Y apply here?”

- “What assumptions am I making that could be limiting my approach?”

- “What’s a completely different paradigm for solving this?”

These questions are mental model-focused and cross-disciplinary, often generating thought partnership that extends beyond the original query.

The most effective technical professionals I know use AI tools not as oracles but as thought partners—asking questions that would be valuable even without the tool, then using the responses to refine their own thinking.

Learning as a Deliberate Practice in the AI Age

If learning remains our superpower in the AI age, how do we cultivate it effectively? Here are practical strategies I’ve found valuable both in my own development and when coaching technical teams:

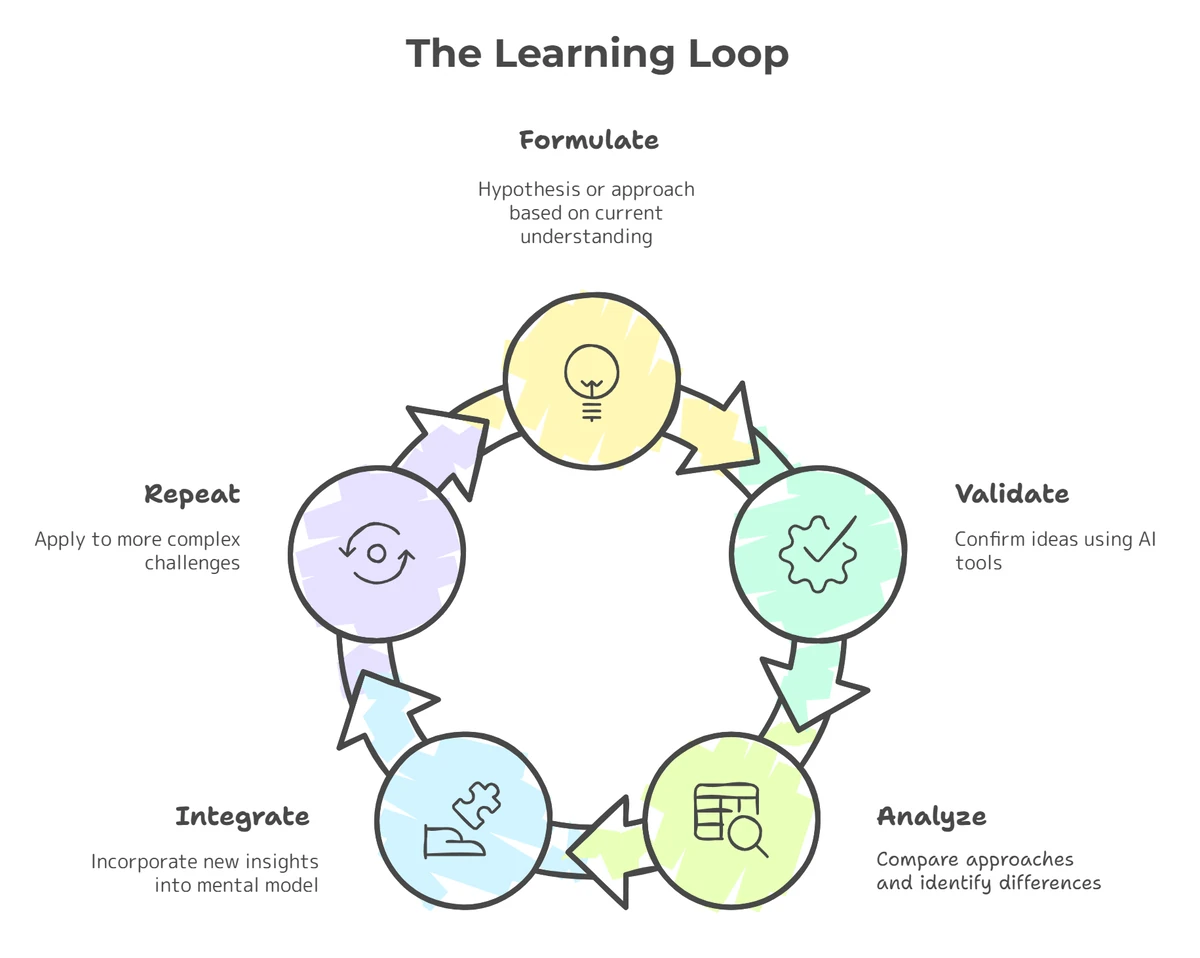

1. Embrace the Learning Loop

Rather than viewing AI tools as answer machines, incorporate them into a learning loop:

- Formulate a hypothesis or approach based on your current understanding

- Validate using AI tools to check your thinking or suggest alternatives

- Analyze the differences between your approach and the AI suggestion

- Integrate new insights into your mental model

- Repeat with increasingly complex challenges

This approach transforms AI from a shortcut into a learning accelerator. Here’s what this looks like in practice with a concrete example.

Poor AI Questioning Approach: “My React component isn’t rendering. Fix it.”

Learning-Focused Approach: “I have a React component that’s crashing. Before you fix it, help me understand: what are the common causes of rendering errors in React? Then, looking at my code, what principle am I violating and why does that cause problems?”

The first approach gets you a quick fix. The second builds your mental model of React’s rendering behavior, error boundaries, and defensive programming—knowledge that applies to hundreds of future situations.

2. Practice Explanation as Validation

One of the most effective ways to verify understanding is to explain concepts in your own words:

- Ask AI tools for explanations, then try to rephrase them without looking

- Generate your own explanations, then use AI to verify your accuracy

- Engage in rubber duck debugging—explaining your code or approach out loud

- Create visual models or diagrams that represent your understanding

While the quote “If you can’t explain something in simple terms, you don’t understand it” is often misattributed to both Einstein and Feynman, the principle behind it remains sound. The ability to explain complex concepts in accessible language demonstrates true understanding.

3. Deliberately Create Knowledge Gaps

Paradoxically, one of the best ways to learn is to deliberately create situations where you don’t immediately have answers:

- Intentionally tackle problems slightly beyond your current capabilities

- Impose constraints that force creative solutions (e.g., “solve this without using library X”)

- Attempt to solve problems before consulting AI tools, then compare approaches

- Work with unfamiliar technologies or domains to expand your mental models

“In times of change, learners inherit the earth, while the learned find themselves beautifully equipped to deal with a world that no longer exists.” (Eric Hoffer)

4. Practice Synthetic Thinking

While AI excels at analytical tasks (breaking things down), humans still maintain an edge in synthesis (combining ideas in novel ways):

- Actively seek connections between different domains or technologies

- Ask “How could concept X from domain Y apply to this problem?”

- Explore analogies and metaphors that might yield new insights

- Combine solutions from multiple AI suggestions into novel approaches

This synthetic thinking—combining ideas from different domains—remains challenging for current AI systems and represents a distinctly human competitive advantage.

Framing Questions for Learning: Understanding Your AI Partner

Not all AI models are created equal, and understanding how to frame questions for maximum learning value requires recognizing the capabilities and limitations of different systems. The way you interact with a coding assistant like GitHub Copilot differs significantly from how you’d approach a conversational AI like ChatGPT or Claude.

Model-Specific Question Strategies

For Conversational AI Models (ChatGPT, Claude, Perplexity):

- These excel at explanation, reasoning, and connecting concepts across domains

- Frame questions to leverage their teaching capabilities: “Explain the reasoning behind X and help me understand why Y matters in this context”

- Ask for multiple perspectives: “What are three different approaches to this problem, and what are the tradeoffs of each?”

- Request synthesis: “How does this concept relate to what I might know from domain Y?”

For Code-Focused AI (GitHub Copilot, Codeium):

- These optimize for implementation over explanation

- Follow up implementation suggestions with broader questions about architecture and patterns

- Use them for initial implementation, then ask conversational AI to explain the principles behind the generated code

For Search-Enhanced AI (Perplexity, Bing Chat):

- These provide current information and citations

- Frame questions to capture both facts and learning: “What are the current best practices for X, and why have they evolved from previous approaches?”

- Ask for sources you can explore further: “What are the foundational papers or articles I should read to understand this topic deeply?”

The Art of Follow-Up Questions

The real learning happens in the follow-up. Rather than accepting the first response, use it as a starting point for deeper exploration:

After receiving any AI response, try asking:

- “What assumptions is this solution making?”

- “How would this approach break down under different conditions?”

- “What alternative approaches might work better in situation Y?”

- “What’s the fundamental principle that makes this work?”
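The first two follow-up questions can also be put to the code itself. The sketch below assumes a hypothetical AI-suggested helper, `average`, and a small `probe` function (also invented here) that runs it against inputs the original prompt never mentioned. It is one simple way to surface hidden assumptions, not a substitute for a proper test suite.

```python
def average(values):
    """Hypothetical AI-suggested helper: arithmetic mean of a list of numbers."""
    return sum(values) / len(values)


def probe(func, cases):
    """Call func on each labelled input; record the result or the exception name."""
    findings = {}
    for label, args in cases.items():
        try:
            findings[label] = func(*args)
        except Exception as exc:  # broad on purpose: every failure mode is of interest
            findings[label] = type(exc).__name__
    return findings


# Inputs chosen to test the assumptions "the list is non-empty" and
# "every element is a number".
cases = {
    "typical": ([1, 2, 3],),
    "single value": ([5],),
    "empty list": ([],),
    "non-numeric": (["a", "b"],),
}

findings = probe(average, cases)
for label, outcome in findings.items():
    print(f"{label}: {outcome}")
```

The empty list and the non-numeric input both fail, which turns a vague "what assumptions is this making?" into two specific questions you can take back to the AI: what should happen for empty input, and should bad types be rejected earlier?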

Prompt Engineering for Learning vs. Problem-Solving

When your goal is learning rather than just solving an immediate problem, adjust your prompting strategy:

Learning-Focused Prompts:

- “Walk me through your reasoning for this solution step by step”

- “What mental model should I develop to understand problems like this?”

- “Challenge my understanding: what might I be missing about this approach?”

- “What questions should I be asking that I’m not asking?”

Problem-Solving Prompts:

- “Solve this specific problem for me”

- “Generate code that accomplishes X”

- “Fix this bug”

The first set builds your capabilities; the second solves immediate needs. Both have their place, but learning-focused prompts create compound returns on your time investment.

The Craftsperson vs. The Tool User

There’s a fundamental difference between viewing AI as a substitute for learning and viewing it as an enhancement to your learning process. This difference parallels the distinction between a craftsperson and a mere tool user.

The approaches differ across several key dimensions:

| Dimension | Craftsperson Approach | Tool User Approach |
|---|---|---|
| Relationship with AI | Partner in exploration | Source of solutions |
| Learning Approach | Uses AI to accelerate understanding | Uses AI to avoid understanding |
| Question Style | Precise, contextual, targeted | Broad, generic, solution-seeking |
| Response Handling | Evaluates, integrates, builds upon | Accepts, implements with minimal analysis |
| Mental Models | Continuously evolving and refining | Fragmentary and static |
| Long-term Trajectory | Increasing versatility and innovation | Increasing dependence and limitation |

The craftsperson approach creates a virtuous cycle:

- Better mental models lead to…

- More precise questions which generate…

- More valuable AI responses that help build…

- Even better mental models

This cycle accelerates learning rather than replacing it. I’ve seen this pattern consistently across technical teams that effectively integrate AI tools—they don’t become less skilled; they become differently skilled, developing higher-order capabilities while delegating routine tasks.

Looking Forward: Learning as Competitive Advantage

As AI tools continue to evolve, the gap between tool users and craftspeople will likely widen. Those who use AI as a substitute for understanding will find themselves increasingly limited in their ability to innovate, debug complex issues, or adapt to new paradigms. Conversely, those who maintain a learning mindset will leverage these tools to reach new heights of technical capability.

The most successful technical professionals of the coming decade won’t be those who most efficiently prompt AI tools, but those who most effectively learn alongside them—using technology to accelerate understanding rather than replace it.

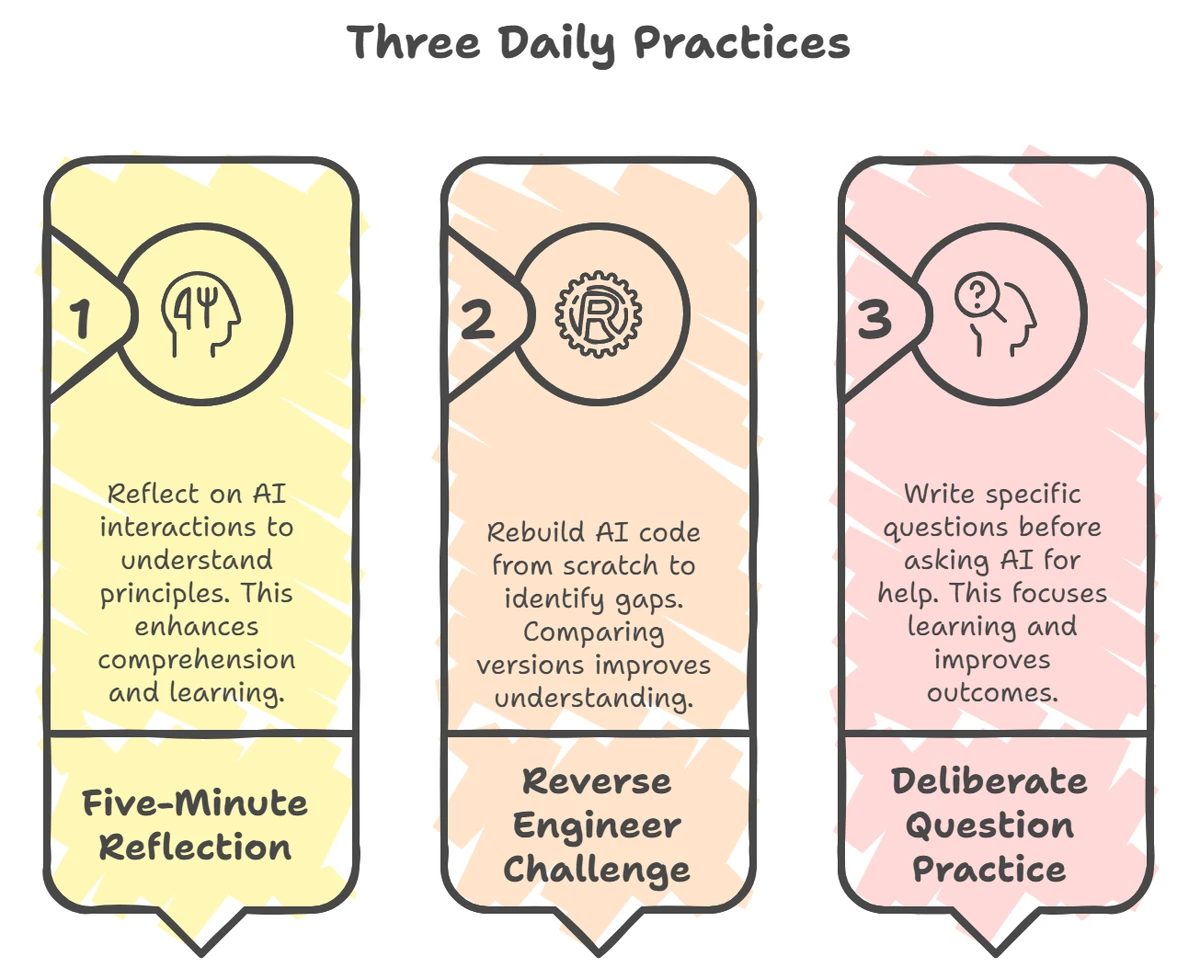

Three Practices to Start Today

If you’re convinced that learning remains your superpower, here are three practices to start implementing immediately:

1. The 5-Minute Reflection

After receiving any AI-generated solution, spend five minutes asking: “Why does this work? What principles underlie this approach? How might I adapt this to different circumstances?”

2. The Reverse Engineer Challenge

Take AI-generated code and try to rebuild it from scratch without looking. Compare your version to the original to identify gaps in your understanding.
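For the comparison step, a plain diff is often enough. Here is a minimal sketch using Python's standard `difflib` module. Both code snippets and the `compare_versions` helper are made up for illustration.

```python
import difflib


def compare_versions(mine, original):
    """Return a unified diff, as a list of lines, between two code snippets."""
    return list(
        difflib.unified_diff(
            mine.splitlines(),
            original.splitlines(),
            fromfile="my_rebuild",
            tofile="ai_original",
            lineterm="",
        )
    )


# Hypothetical rebuild written from memory.
my_rebuild = """def dedupe(items):
    return list(set(items))
"""

# Hypothetical AI-generated original.
ai_original = """def dedupe(items):
    seen = set()
    return [x for x in items if not (x in seen or seen.add(x))]
"""

diff = compare_versions(my_rebuild, ai_original)
print("\n".join(diff))
```

In this made-up case the diff points at a real gap: the rebuild discards the input order, while the original keeps the first occurrence of each item in place. That difference is the thing to go and understand.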

3. The Deliberate Question Practice

Before asking AI tools for help, write down three specific, well-formed questions about the problem. This primes your mind to engage actively rather than passively receive information.

“The illiterate of the 21st century will not be those who cannot read and write, but those who cannot learn, unlearn, and relearn.” (commonly attributed to Alvin Toffler)

In a world increasingly mediated by AI, the ability to learn deeply and continuously isn’t just a nice-to-have skill—it’s the fundamental superpower that will define successful technical careers. The tools will change, the frameworks will evolve, but the craftsperson’s commitment to understanding will remain invaluable.

What learning practices have you found most effective in the age of AI? How do you balance the convenience of instant answers with the necessity of deep understanding?