API-First AI

Your API strategy could determine AI success more than your models

Last time, we talked about AI economics—token budgets, cost explosions, and why most teams optimize for the wrong metrics. You can model costs perfectly, but it won’t matter if your architecture can’t adapt.

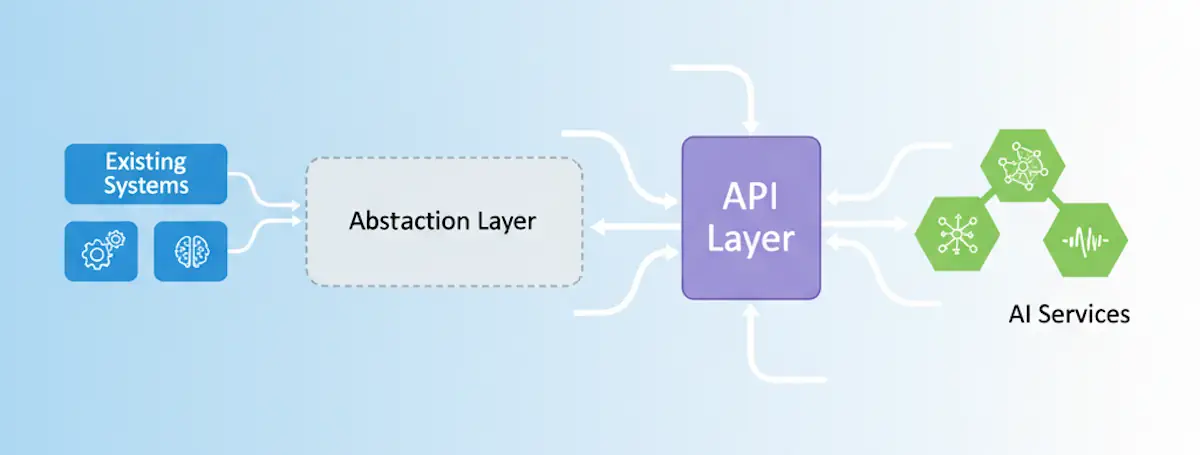

The teams succeeding with AI aren’t the ones with the fanciest models. They’re the ones who treat AI integration like any other enterprise service—with proper abstraction layers, clear contracts, and testable boundaries.

Last year, I came across a great example while consulting for a small aviation company that was reimagining its rapidly prototyped OTG decision engine. They had solid models for risk assessment (a core competency), but terrible connections to their on-the-ground information partners for rapid prioritization data. Every time a partner updated their systems, API contracts broke, data gaps appeared in pipelines, and security reviews dragged on for weeks with vendors who saw them as just a “little shop in the Midwest.”

We fixed it by treating their decision engine like any other consumer of partner services. Clean API abstractions. Clear contracts that isolated partner changes. Proper validation layers. Features that used to break monthly became stable. Integration time dropped from weeks to days because they could onboard new providers quickly.

Your AI implementation will only be as good as your API architecture.

Three Integration Patterns That Actually Work

Pattern 1: The Service Wrapper

Wrap your AI calls in your own API endpoints. Don’t let AI SDKs touch your application code directly.

When OpenAI releases GPT-6 (or 10, or 200) or you switch to Claude, you change one service layer. Your application code doesn't notice.

In our earlier example, the team wrapped their recommendation engine API this way. They swapped the underlying AI provider three times in the last year. Zero application code changes.
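Here is a minimal sketch of what such a wrapper might look like. Everything in it is hypothetical: `AIService`, `CompletionProvider`, and the two stand-in providers are illustrative names, and a real adapter would call the vendor SDK inside `complete`. The point is only that application code depends on `AIService`, never on a provider.

```python
from dataclasses import dataclass
from typing import Protocol


@dataclass
class Completion:
    """Provider-neutral result that application code receives."""
    text: str
    provider: str


class CompletionProvider(Protocol):
    """Anything that can turn a prompt into text (hypothetical contract)."""
    name: str

    def complete(self, prompt: str) -> str: ...


class EchoProvider:
    """Stand-in for a real SDK adapter; it just echoes the prompt."""
    name = "echo"

    def complete(self, prompt: str) -> str:
        return f"echo: {prompt}"


class UppercaseProvider:
    """Second stand-in, used to show a provider swap."""
    name = "upper"

    def complete(self, prompt: str) -> str:
        return prompt.upper()


class AIService:
    """The only AI-related class the application imports."""

    def __init__(self, provider: CompletionProvider) -> None:
        self._provider = provider

    def recommend(self, question: str) -> Completion:
        text = self._provider.complete(question)
        return Completion(text=text, provider=self._provider.name)


# Swapping providers is a one-line change where the service is constructed.
service = AIService(EchoProvider())
result = service.recommend("prioritize inbound reports")
```

Replacing `EchoProvider()` with `UppercaseProvider()` changes the backend without touching any caller of `recommend`.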

Pattern 2: The Context Gateway

Build a dedicated service that prepares context for AI requests. It pulls only the necessary data, formats it correctly, and manages token budgets.

The gateway controls exactly what context the AI sees, keeping token costs predictable.

This solves the RAG explosion problem from the previous post: retrieval can only add what the gateway’s token budget allows.
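As a rough sketch of the idea, a gateway can rank candidate documents and stop adding them once a token budget is reached. The names here (`Document`, `estimate_tokens`, `build_context`) are made up for illustration, and the four-characters-per-token estimate is only a crude assumption; a real gateway would use the provider's tokenizer.

```python
from dataclasses import dataclass


@dataclass
class Document:
    text: str
    relevance: float  # higher is more relevant; assumed to come from a retriever


def estimate_tokens(text: str) -> int:
    """Crude estimate (assumption): roughly 4 characters per token."""
    return max(1, len(text) // 4)


def build_context(docs: list[Document], token_budget: int) -> tuple[str, int]:
    """Select the most relevant documents that fit within the budget.

    Returns the joined context string and the estimated tokens used.
    """
    selected: list[str] = []
    used = 0
    for doc in sorted(docs, key=lambda d: d.relevance, reverse=True):
        cost = estimate_tokens(doc.text)
        if used + cost > token_budget:
            continue  # skip anything that would exceed the budget
        selected.append(doc.text)
        used += cost
    return "\n---\n".join(selected), used


docs = [
    Document("a" * 40, relevance=0.9),   # about 10 tokens
    Document("b" * 400, relevance=0.8),  # about 100 tokens, too large
    Document("c" * 40, relevance=0.5),   # about 10 tokens
]
context, tokens_used = build_context(docs, token_budget=25)
```

Because the budget is enforced in one place, the cost of a request is bounded before it ever reaches the model.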

Pattern 3: The Response Transformer

AI responses need business logic applied before they’re useful. Create a service layer that transforms AI outputs into domain objects your application understands.

The transformer layer is responsible for:

• Validation rules

• Business logic

• Error handling

Together, these ensure AI suggestions comply with business rules before reaching users.

A customer service team I worked with routes all AI responses through business rule validators. The AI might suggest a refund outside policy limits. The transformer catches this before it reaches the agent, applying proper constraints.
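A transformer for the refund case could be sketched roughly like this. The JSON shape of the AI response, the `RefundSuggestion` type, and the 100.00 policy limit are all assumptions made for the example, not details from that team's system.

```python
import json
import math
from dataclasses import dataclass

MAX_REFUND = 100.00  # hypothetical policy limit


@dataclass
class RefundSuggestion:
    amount: float
    reason: str
    needs_review: bool


def transform_response(raw: str, max_refund: float = MAX_REFUND) -> RefundSuggestion:
    """Parse raw model output and apply policy before it reaches the agent."""
    try:
        data = json.loads(raw)
        amount = float(data["amount"])
        reason = str(data.get("reason", ""))
    except (ValueError, KeyError, TypeError, AttributeError):
        # Error handling: malformed output becomes a safe, flagged default.
        return RefundSuggestion(0.0, "unparseable AI response", True)

    # Validation: reject values that make no sense as a refund.
    if not math.isfinite(amount) or amount < 0:
        return RefundSuggestion(0.0, "invalid amount rejected", True)

    # Business logic: cap at the policy limit and flag for human review.
    if amount > max_refund:
        return RefundSuggestion(max_refund, reason, True)

    return RefundSuggestion(amount, reason, False)


capped = transform_response('{"amount": 250, "reason": "late delivery"}')
```

In this sketch an out-of-policy suggestion is capped and flagged rather than dropped, so the agent still sees the AI's reasoning. Whether to cap, reject, or escalate is a business decision that belongs in this layer.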

The API-First Advantage

Without an API layer:

- Months to swap providers

- Complex security reviews

- Tight coupling everywhere

With an API-first approach:

- Days to swap providers

- Security reviewed once

- Clear boundaries

The upfront investment in API design pays off every time you need to change AI providers, update models, or add new capabilities.

The Key To Keeping Up

Gartner predicted in July 2024 that at least 30% of generative AI projects would be abandoned after proof of concept by the end of 2025, citing poor data quality, inadequate risk controls, escalating costs, or unclear business value. Many fail because they can’t integrate cleanly with existing systems.

The pattern works because it separates concerns properly:

- Your AI models change frequently (new versions, different providers)

- Your business logic changes occasionally (new rules, regulations)

- Your core data schema changes rarely (you hope)

API layers let each component evolve at its own pace.

Starting Simple

You don’t need to architect everything perfectly upfront. Start with one wrapper around your first AI call.

This week: Take your most critical AI feature and add a thin API layer between your application and the AI service. Even a simple wrapper that just passes requests through establishes the pattern.

Next week: Add basic monitoring to that API layer. Track token usage, response times, error rates. You now have visibility you didn’t have before.

Week three: Add context management. Instead of passing raw data to the AI, format it in your API layer. You’ll immediately see where you can optimize token usage.
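The monitoring step from week two can start very small. The sketch below assumes your wrapper's model call returns the response text together with a token count; `CallMetrics`, `monitored`, and `fake_model` are hypothetical names, and in practice you would likely send these numbers to whatever metrics system you already run.

```python
import time
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class CallMetrics:
    calls: int = 0
    errors: int = 0
    total_tokens: int = 0
    latencies: list[float] = field(default_factory=list)

    @property
    def error_rate(self) -> float:
        return self.errors / self.calls if self.calls else 0.0


def monitored(
    call_model: Callable[[str], tuple[str, int]],
    metrics: CallMetrics,
) -> Callable[[str], str]:
    """Wrap a model call that returns (text, tokens_used) and record metrics."""

    def wrapper(prompt: str) -> str:
        start = time.perf_counter()
        metrics.calls += 1
        try:
            text, tokens = call_model(prompt)
        except Exception:
            metrics.errors += 1
            raise  # record the failure, then let the caller handle it
        finally:
            # Latency is recorded for successes and failures alike.
            metrics.latencies.append(time.perf_counter() - start)
        metrics.total_tokens += tokens
        return text

    return wrapper


def fake_model(prompt: str) -> tuple[str, int]:
    """Stand-in for the real AI call; counts words as 'tokens'."""
    if not prompt:
        raise ValueError("empty prompt")
    return prompt[::-1], len(prompt.split())


metrics = CallMetrics()
ask = monitored(fake_model, metrics)
answer = ask("hello world")
```

After a few calls, `metrics.total_tokens`, `metrics.error_rate`, and `metrics.latencies` give you the visibility described above without changing any calling code.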

You don’t need comprehensive architecture documentation. You need one clean example that demonstrates the value to your team.

The Integration Challenge

The hard part isn’t connecting systems technically; it’s maintaining those connections as both systems evolve.

Good API design for AI means:

- Version your AI endpoints like any other API

- Abstract provider-specific details behind consistent interfaces

- Build monitoring and observability into the integration layer

- Design for failure because AI services will have outages

One startup I advised built their entire product directly on OpenAI’s API. No wrapper. When OpenAI had an outage, their product was completely down. When OpenAI deprecated an API version, they had weeks of emergency work and were forced to keep playing catch-up.

Another project hit the same OpenAI outage. They swapped to their backup provider in 20 minutes by changing one configuration value. Instead of scrambling, they spent those hours delivering additional customer value and, behind the scenes, working out how to make the swap to the backup provider happen automatically next time.
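One way the automatic version of that swap might look is an ordered provider list that the wrapper walks until a call succeeds. This is a sketch under assumptions, not that team's implementation: `complete_with_fallback`, `PROVIDER_ORDER`, and the stand-in providers are invented for the example.

```python
from typing import Callable

Provider = Callable[[str], str]


class AllProvidersFailed(RuntimeError):
    """Raised when every configured provider has failed."""


def complete_with_fallback(
    prompt: str,
    providers: dict[str, Provider],
    order: list[str],
) -> tuple[str, str]:
    """Try providers in the configured order; return (provider_name, text)."""
    errors: dict[str, Exception] = {}
    for name in order:
        try:
            return name, providers[name](prompt)
        except Exception as exc:  # a real system would catch narrower errors
            errors[name] = exc
    raise AllProvidersFailed(f"all providers failed: {list(errors)}")


def primary(prompt: str) -> str:
    """Stand-in for the main provider, simulating an outage."""
    raise ConnectionError("primary is down")


def backup(prompt: str) -> str:
    """Stand-in for the backup provider."""
    return f"backup answer to: {prompt}"


PROVIDERS = {"primary": primary, "backup": backup}
PROVIDER_ORDER = ["primary", "backup"]  # the single configuration value

used, text = complete_with_fallback("status?", PROVIDERS, PROVIDER_ORDER)
```

The manual swap from the story corresponds to editing `PROVIDER_ORDER`. The loop makes the same decision automatically when the first provider fails.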

Both teams had smart engineers. One had better architecture.

We’ve Been Abstracting For Years

Your AI strategy will succeed or fail based on how well it integrates with your existing systems. The most sophisticated ML model in the world is useless if you can’t reliably connect it to your data and workflows.

Before your next AI feature kickoff, ask your team: “What’s our API strategy for this?” If the answer is “we’ll call the AI service directly,” you’re setting up for integration pain down the road.

The best time to establish clean API patterns was before your first AI integration. The second best time is now, before you have ten tightly-coupled AI features that are painful to maintain.

What’s the most tightly-coupled AI integration in your system right now? That’s where you start adding the API layer you should have built first.