Modernizing C++: Optimizing for Performance (Part B)

Advanced tools and techniques for squeezing maximum performance from your C++ applications.

In Part A of this series, we explored code-level optimizations using modern C++ features—from move semantics to compile-time computation. These techniques provide excellent performance improvements while making your code more expressive and maintainable.

Now, let’s dive into the more advanced aspects of performance optimization: the tools, techniques, and hardware-aware strategies that can take your C++ applications to the next level.

We’ll explore how to measure performance accurately, leverage compiler optimizations effectively, and write code that makes the most of modern hardware architecture.

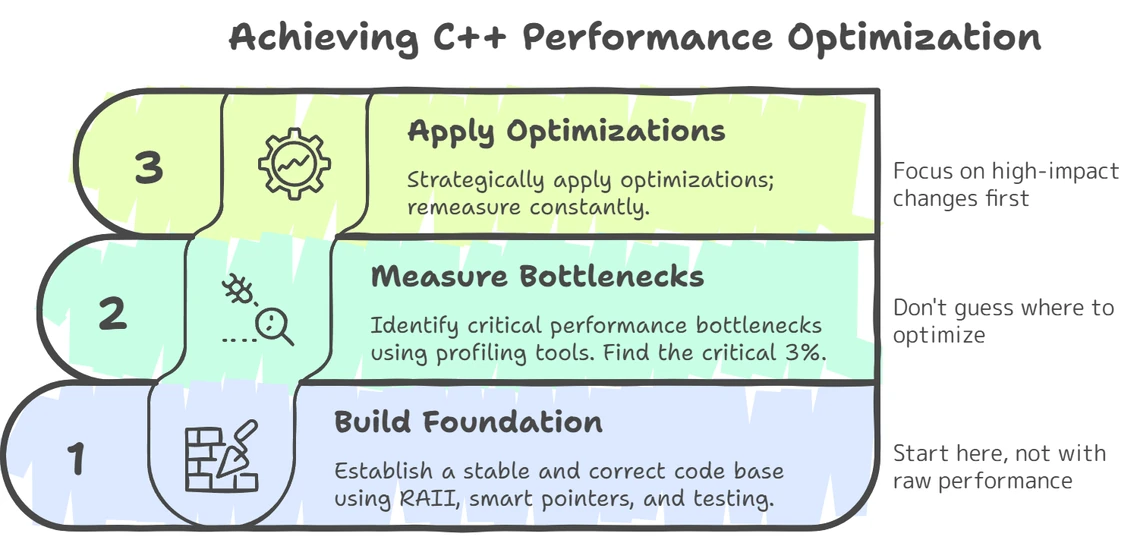

Performance Optimization Framework

Before we begin, here’s a roadmap of the optimization strategy we’ll be following:

- Measure - Identify bottlenecks through profiling

- Optimize Compiler Usage - Leverage compiler flags and optimization features

- Hardware-Aware Programming - Write code that works with modern CPU architecture

- Validate - Confirm improvements through benchmarking

Measuring Performance: Finding the Critical 3%

As Donald Knuth famously observed, “Premature optimization is the root of all evil (or at least most of it) in programming.” The less-quoted continuation is equally important: “Yet we should not pass up our opportunities in that critical 3%.”

Finding that critical 3% (the hotspots where optimization effort yields the most significant benefits) requires the right measurement tools and techniques.

Choosing the Right Profiling Tool

Different performance problems require different measurement approaches.

| Profiling Approach | Best For | Examples | Overhead |
|---|---|---|---|
| Sampling Profilers | Identifying hotspots without significant performance impact | perf, Intel VTune Profiler, AMD μProf, Windows Performance Toolkit | Low |
| Instrumentation Profilers | Detailed timing of function calls and execution paths | Google Performance Tools, Callgrind, Tracy | Moderate to High |
| Memory Profilers | Finding memory leaks and inefficient allocation patterns | Heaptrack, Dr. Memory, Intel Memory Latency Checker | Varies |
| Hardware Event Counters | CPU-level optimizations (cache misses, branch prediction, etc.) | PAPI, Linux perf events, Intel VTune and AMD μProf | Very Low |

Microbenchmarking: Measuring Small Code Sections

Personally, I’m a big fan of Tracy for instrumentation, but for comparing specific implementations or algorithms, the Google Benchmark library has become the de facto standard for C++ microbenchmarking.

Here’s a simple example that measures sorting performance:

```cpp
#include <benchmark/benchmark.h>
#include <algorithm>
#include <cstddef>
#include <cstdlib>
#include <vector>

static void BM_SortVector(benchmark::State& state) {
    // Get test vector size from the benchmark framework
    const std::size_t size = static_cast<std::size_t>(state.range(0));

    for (auto _ : state) {
        // Don't measure setup time (Pause/ResumeTiming have some overhead
        // of their own, so treat results for tiny sizes with care)
        state.PauseTiming();
        std::vector<int> v(size);
        for (std::size_t i = 0; i < size; ++i) {
            v[i] = std::rand() % 1000;
        }
        state.ResumeTiming();

        // Only measure the actual sort
        std::sort(v.begin(), v.end());

        // Keep the compiler from discarding the "unused" sorted result
        benchmark::DoNotOptimize(v.data());
        benchmark::ClobberMemory();
    }

    // Report items processed for throughput stats
    state.SetItemsProcessed(state.iterations() * state.range(0));
}

// Test with different sizes (8, 64, 512, 4K, 32K)
BENCHMARK(BM_SortVector)->Range(8, 8 << 12);

BENCHMARK_MAIN();
```

This approach offers several advantages over manual timing:

- Automatically runs enough iterations to get a stable measurement
- Reports aggregate statistics (mean, median, standard deviation) across repeated runs via --benchmark_repetitions
- Allows fair comparison between alternatives
- Factors out setup/teardown time

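A common pitfall is timing code with std::chrono directly for microbenchmarking. This fails to account for factors like CPU frequency scaling and compiler optimizations that might eliminate “unused” code. Google Benchmark mitigates both: it warns when CPU frequency scaling is enabled, and its DoNotOptimize and ClobberMemory helpers keep the compiler from discarding the code being measured.

Establishing a Reliable Performance Testing Environment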

For consistent and meaningful performance measurements, control your testing environment:

- Disable CPU throttling and turbo boost for consistent clock speeds

- Close unnecessary background applications

- Use a dedicated machine if possible

- Run multiple iterations (at least 10) to account for variance

- For production benchmarks, capture and replay real-world traffic using tools like tcpreplay
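Running multiple iterations only pays off if the runs are summarized sensibly. The sketch below assumes you have already collected one wall-clock time per run, in milliseconds; RunStats and summarize are hypothetical names used for illustration. It reports the median, which is less sensitive than the mean to the occasional run disturbed by background activity, along with the sample standard deviation and the coefficient of variation (standard deviation divided by mean).

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <numeric>
#include <stdexcept>
#include <vector>

// Hypothetical helper: summary statistics for repeated benchmark runs
struct RunStats {
    double mean;
    double median;
    double stddev;  // sample standard deviation (n - 1 denominator)
    double cv;      // coefficient of variation = stddev / mean
};

RunStats summarize(std::vector<double> times_ms) {
    if (times_ms.size() < 2) {
        throw std::invalid_argument("need at least two runs");
    }
    const std::size_t n = times_ms.size();

    const double mean =
        std::accumulate(times_ms.begin(), times_ms.end(), 0.0) / n;

    double sq_sum = 0.0;
    for (double t : times_ms) {
        sq_sum += (t - mean) * (t - mean);
    }
    const double stddev = std::sqrt(sq_sum / (n - 1));

    // Median: sort our copy (taken by value) and pick the middle element(s)
    std::sort(times_ms.begin(), times_ms.end());
    const double median = (n % 2 == 1)
        ? times_ms[n / 2]
        : (times_ms[n / 2 - 1] + times_ms[n / 2]) / 2.0;

    return {mean, median, stddev, stddev / mean};
}
```

For example, summarize({10.0, 12.0, 11.0, 13.0, 14.0}) gives a mean and median of 12 and a standard deviation of about 1.58. If the coefficient of variation stays high after you have quieted the machine, treat small differences between versions with suspicion.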

Leveraging Compiler Optimizations

Modern C++ compilers are remarkably sophisticated optimization tools, but many developers use only basic optimization levels (-O2 or -O3) without exploring the full capability set.

Beyond Basic Optimization Flags

Here are some powerful optimization flags you might not be using, along with when they’re most effective:

| Optimization Flag | What It Does | When To Use | Risk Level |
|---|---|---|---|
| -ffast-math | Enables aggressive floating-point optimizations that may violate IEEE 754 semantics (for example, by assuming no NaNs or infinities) | Scientific computing, games, graphics | Medium |
| -march=native | Optimizes for your specific CPU architecture | Performance-critical applications running on known hardware | Low |
| -flto | Enables link-time optimization across translation units | Applications with many small functions spread across files | Low |
| -fprofile-generate / -fprofile-use | Instruments the code, then optimizes based on actual execution patterns | Applications with complex control flow | Low |
| /GL & /LTCG (MSVC) | Whole program optimization and link-time code generation | Large applications with many interdependent components | Low |

When compiling with -march=native, your code will be optimized for your specific CPU but may not run on different processors. For distributable software, consider a more generic target such as -march=x86-64-v3, which targets CPUs supporting AVX2, for a good balance of performance and compatibility.

Profile-Guided Optimization (PGO)

One of the most powerful yet underutilized compiler features is Profile-Guided Optimization (PGO). This two-stage process generates a binary that collects execution statistics, then uses that data to optimize a final build.

Here’s how to implement it:

```shell
# Step 1: Compile with instrumentation
g++ -O3 -fprofile-generate program.cpp -o program

# Step 2: Run with representative workloads (GCC writes .gcda profile files)
./program typical_input1
./program typical_input2

# Step 3: Recompile using the collected profile data
g++ -O3 -fprofile-use program.cpp -o program
```

With the profile data in hand, the compiler can make informed decisions about:

- Which functions to prioritize for inlining

- How to arrange code for better cache locality

- Which branches are more likely to be taken

- Which loops benefit most from unrolling

According to Intel, PGO can improve application performance by 15-30% on top of standard optimization levels, with some hot paths seeing improvements of 2-4x. Actual gains depend heavily on how representative your training workloads are.

Function Attributes for Optimization Hints

GCC and Clang support function attributes (spelled [[gnu::...]] in standard attribute syntax) that provide optimization hints.

These attributes give the compiler additional information to make better optimization decisions. For example, marking error-handling code as cold allows the compiler to optimize the layout of your binary to improve instruction cache usage for the common case.

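```cpp
// Force inlining: stronger than a hint (GCC reports an error
// if the function cannot be inlined)
[[gnu::always_inline]]
inline int fastFunction(int x) {
    return x * x;
}

// Hint that function is rarely called: it is optimized for size,
// moved away from hot code, and branches leading to it are treated as unlikely
[[gnu::cold]]
void errorHandler() {
    // Error handling code
}

// Hint that function has no side effects; it may read
// (but not modify) global memory
[[gnu::pure]]
int compute(int x) {
    return x * 42;
}

// Stricter than pure: no side effects, no reads of global memory,
// and the result depends only on the arguments
[[gnu::const]]
int squareOf(int x) {
    return x * x;
}
```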
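To see where pure can pay off, consider a loop whose condition calls a function that scans memory. Because a pure function has no side effects, GCC and Clang are allowed to evaluate it once and reuse the result when nothing in the loop writes to the memory it reads. The attribute matters most when the definition lives in another translation unit, where the compiler cannot infer purity on its own. This is a minimal sketch with hypothetical names (myStrlen, countChar); whether the call is actually hoisted depends on the compiler and optimization level.

```cpp
#include <cstddef>

// Declared pure: reads memory through s but modifies nothing, so repeated
// calls with the same argument (and unchanged memory) may be merged.
// In real code this would typically be defined in another translation unit.
[[gnu::pure]]
std::size_t myStrlen(const char* s) {
    std::size_t n = 0;
    while (s[n] != '\0') {
        ++n;
    }
    return n;
}

// Counts occurrences of c in s. The loop body only reads s, so the
// compiler may hoist myStrlen(s) out of the loop condition.
std::size_t countChar(const char* s, char c) {
    std::size_t count = 0;
    for (std::size_t i = 0; i < myStrlen(s); ++i) {
        if (s[i] == c) {
            ++count;
        }
    }
    return count;
}
```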

Hardware-Aware Programming

Hardware architecture has evolved dramatically over the past few decades, with features like wider SIMD units, more complex cache hierarchies, and increasingly parallel execution. To achieve peak performance, C++ code must be written with these hardware characteristics in mind.

SIMD Vectorization: Processing Multiple Data Points at Once

Single Instruction, Multiple Data (SIMD) instructions allow operating on 4, 8, 16, or more data elements simultaneously. This parallel processing capability is one of the most powerful optimization techniques available, especially for numerically intensive computations.

Why SIMD Matters

The performance impact of SIMD can be dramatic for several reasons:

- Data Parallelism: A single instruction processes multiple data elements, potentially multiplying throughput by 4x, 8x, or more

- Memory Bandwidth Utilization: Better use of cache lines and memory bandwidth by processing multiple elements per fetch

- Instruction Efficiency: Fewer instructions needed to process the same amount of data

Today’s CPUs have increasingly powerful SIMD capabilities. I remember back in late 1999 when the Pentium III CPUs came out featuring “IA-32 w/ SSE”; it was a few years before we even saw applications (games, at the time) that could use the technology. Here is how the x86 SIMD extensions have grown since then:

- SSE/SSE2/SSE3/SSSE3/SSE4 (128-bit): Process 4 floats simultaneously

- AVX/AVX2 (256-bit): Process 8 floats simultaneously

- AVX-512 (512-bit): Process 16 floats simultaneously

There are several approaches to leveraging SIMD, from highest-level to lowest-level:

1. Let the Compiler Auto-Vectorize

Most compilers can automatically vectorize suitable loops and operations. You can help the compiler by:

- Writing simple, straight-line loops with clear bounds

- Avoiding dependencies between loop iterations

- Using standard algorithms that compilers recognize well

To see what the compiler vectorized, use flags like -fopt-info-vec (GCC) or -Rpass=loop-vectorize (Clang).
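As an illustration of those guidelines, the sketch below contains one loop that compilers typically vectorize and one that they typically do not. saxpy and prefixSum are example names; __restrict is a non-standard qualifier supported by GCC, Clang, and MSVC that promises the arrays do not overlap, which spares the compiler from emitting runtime overlap checks (or giving up on vectorization). Compiling with -O3 -fopt-info-vec (GCC) or -O3 -Rpass=loop-vectorize (Clang) should show which loops were vectorized on your toolchain.

```cpp
#include <cstddef>

// y[i] = a * x[i] + y[i]
// Countable loop, no dependency between iterations; __restrict promises
// that x and y do not overlap, so no runtime alias check is needed.
void saxpy(std::size_t n, float a,
           const float* __restrict x, float* __restrict y) {
    for (std::size_t i = 0; i < n; ++i) {
        y[i] = a * x[i] + y[i];
    }
}

// Counter-example: each iteration depends on the result of the previous
// one (a running prefix sum), so this loop is typically not auto-vectorized.
void prefixSum(std::size_t n, float* v) {
    for (std::size_t i = 1; i < n; ++i) {
        v[i] += v[i - 1];
    }
}
```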

2. Use Higher-Level SIMD Libraries

Several libraries offer portable SIMD abstractions:

- Highway: Google’s portable SIMD library

- Xsimd: Part of the xtensor ecosystem

- EVE: Expressive Vector Engine

Example using Highway:

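```cpp
#include <cstddef>
#include "hwy/highway.h"

void addVectors(const float* a, const float* b, float* result, std::size_t size) {
    // Static dispatch: the vector width is fixed by the target the compiler flags enable
    namespace hn = hwy::HWY_NAMESPACE;
    const hn::ScalableTag<float> d;
    const std::size_t N = hn::Lanes(d);  // floats per vector on this target

    std::size_t i = 0;
    // Full vectors only; LoadU/StoreU do not require aligned pointers
    for (; i + N <= size; i += N) {
        const auto va = hn::LoadU(d, a + i);
        const auto vb = hn::LoadU(d, b + i);
        const auto sum = hn::Add(va, vb);
        hn::StoreU(sum, d, result + i);
    }
    // Scalar tail for the remaining elements
    for (; i < size; ++i) {
        result[i] = a[i] + b[i];
    }
}
```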

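With static dispatch, as here, this code adapts to the SIMD width of whichever target the compiler flags enable (for example SSE4, AVX2, or AVX-512). Highway can also pick the best target at runtime through its dynamic dispatch mechanism (foreach_target.h together with HWY_DYNAMIC_DISPATCH).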

3. For Maximum Control: Use Intrinsics

For performance-critical code sections, you can use architecture-specific intrinsics.

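```cpp
#include <cstddef>
#include <immintrin.h>

// Requires AVX (e.g. compile with -mavx or -march=x86-64-v3)
void addVectors(const float* a, const float* b, float* result, std::size_t size) {
    std::size_t i = 0;
    // Process 8 floats at a time with 256-bit AVX registers
    for (; i + 8 <= size; i += 8) {
        __m256 va = _mm256_loadu_ps(&a[i]);
        __m256 vb = _mm256_loadu_ps(&b[i]);
        __m256 sum = _mm256_add_ps(va, vb);
        _mm256_storeu_ps(&result[i], sum);
    }
    // Scalar tail for the last (size % 8) elements
    for (; i < size; ++i) {
        result[i] = a[i] + b[i];
    }
}
```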

SIMD vectorization can yield dramatic speedups, often 4-8x for suitable algorithms. However, it requires careful data layout and algorithm design. Not all operations vectorize equally well: a data-dependent branch generally has to be turned into masked operations, where both sides of the branch are computed for every lane and the results are blended. When lanes take different paths (“branch divergence”), part of each vector’s work is thrown away.
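The sketch below shows what masking means in practice. The scalar loop contains a per-element branch (x > 0 ? x : 0.1f * x, a leaky-ReLU-style function chosen only for illustration). The SIMD version computes both results for four lanes at once, builds a per-lane mask with a comparison, and blends the two results with bitwise AND/ANDNOT/OR. It uses only SSE intrinsics, which are part of the x86-64 baseline, so no extra compiler flags should be needed on x86-64. The cost is that both sides of the branch are always computed.

```cpp
#include <cstddef>
#include <xmmintrin.h>  // SSE intrinsics

// Scalar reference: a data-dependent branch per element
void leakyScalar(const float* in, float* out, std::size_t n) {
    for (std::size_t i = 0; i < n; ++i) {
        out[i] = in[i] > 0.0f ? in[i] : 0.1f * in[i];
    }
}

// Branch-free SIMD version: evaluate both sides, then select per lane
void leakySSE(const float* in, float* out, std::size_t n) {
    const __m128 zero = _mm_setzero_ps();
    const __m128 slope = _mm_set1_ps(0.1f);
    std::size_t i = 0;
    for (; i + 4 <= n; i += 4) {
        __m128 x = _mm_loadu_ps(in + i);
        __m128 scaled = _mm_mul_ps(x, slope);  // "else" result for every lane
        __m128 mask = _mm_cmpgt_ps(x, zero);   // all bits set where x > 0
        // (mask & x) | (~mask & scaled)
        __m128 result = _mm_or_ps(_mm_and_ps(mask, x),
                                  _mm_andnot_ps(mask, scaled));
        _mm_storeu_ps(out + i, result);
    }
    // Scalar tail for the remaining (n % 4) elements
    for (; i < n; ++i) {
        out[i] = in[i] > 0.0f ? in[i] : 0.1f * in[i];
    }
}
```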

Outside of theory (and fun), in 30+ years of development, I’ve never written an application that needed hand-coded intrinsics. Maybe next week. 😊

When to Use Each SIMD Approach

| Approach | Best For | Watch Out For |
|---|---|---|
| Compiler Auto-Vectorization | Standard algorithms, simple loops | Complex logic that might prevent vectorization |
| SIMD Libraries | General-purpose code, cross-platform needs | Small overhead compared to hand-tuned intrinsics |
| Direct Intrinsics | Performance-critical inner loops | Maintainability challenges, hardware-specific code |

The key to effective SIMD usage is identifying the most compute-intensive parts of your application and focusing your vectorization efforts there. According to benchmarks from Intel’s optimization studies, just vectorizing the top 10% of hot loops can often yield 70-80% of the potential performance gains with substantially less effort than a complete vectorization approach.

Memory Layout Optimization

As we noted in Part A, memory access patterns often dominate performance in modern systems due to the growing gap between CPU and memory speeds.

1. Structure of Arrays (SoA) for SIMD Operations

For data-parallel operations, organize data in a Structure of Arrays rather than an Array of Structures:

```cpp
#include <vector>

// Structure of Arrays (SoA) for vectorized operations:
// each component is stored contiguously across all particles
struct ParticleSOA {
    std::vector<float> x, y, z;     // Position components
    std::vector<float> vx, vy, vz;  // Velocity components
    std::vector<float> mass;
};
```

This approach vastly improves SIMD efficiency by allowing contiguous memory loads and stores.
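If you also want the element buffers aligned (for example, to a 64-byte cache line so that aligned loads such as _mm256_load_ps can be used), note that writing alignas(64) on a std::vector member is not enough: it aligns only the vector object itself (its internal pointers), not the heap buffer that holds the floats. One option is a small custom allocator built on C++17’s aligned operator new. AlignedAllocator and AlignedParticleSOA below are hypothetical names, and this is a minimal sketch rather than a production-ready allocator.

```cpp
#include <cstddef>
#include <new>
#include <vector>

// Minimal allocator returning Alignment-aligned storage (C++17 aligned new)
template <typename T, std::size_t Alignment>
struct AlignedAllocator {
    static_assert(Alignment >= alignof(T), "Alignment must be at least alignof(T)");
    using value_type = T;

    // Needed because of the non-type template parameter
    template <typename U>
    struct rebind {
        using other = AlignedAllocator<U, Alignment>;
    };

    AlignedAllocator() noexcept = default;
    template <typename U>
    AlignedAllocator(const AlignedAllocator<U, Alignment>&) noexcept {}

    T* allocate(std::size_t n) {
        return static_cast<T*>(
            ::operator new(n * sizeof(T), std::align_val_t{Alignment}));
    }
    void deallocate(T* p, std::size_t) noexcept {
        ::operator delete(p, std::align_val_t{Alignment});
    }
};

// Stateless: any two instances can free each other's memory
template <typename T, typename U, std::size_t A>
bool operator==(const AlignedAllocator<T, A>&, const AlignedAllocator<U, A>&) noexcept {
    return true;
}
template <typename T, typename U, std::size_t A>
bool operator!=(const AlignedAllocator<T, A>&, const AlignedAllocator<U, A>&) noexcept {
    return false;
}

// Float arrays whose first element starts on a 64-byte boundary
using AlignedFloats = std::vector<float, AlignedAllocator<float, 64>>;

struct AlignedParticleSOA {
    AlignedFloats x, y, z;     // Position components
    AlignedFloats vx, vy, vz;  // Velocity components
    AlignedFloats mass;
};
```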

2. Memory Prefetching

For predictable access patterns, prefetching can hide memory latency:

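```cpp
#include <cstddef>
#include <immintrin.h>

void process(float value);  // per-element work, defined elsewhere

void processData(const float* data, std::size_t size) {
    for (std::size_t i = 0; i < size; i++) {
        // Prefetch data that will be needed 16 iterations later
        // (16 floats = 64 bytes, one cache line on typical x86 CPUs)
        if (i + 16 < size) {
            _mm_prefetch(reinterpret_cast<const char*>(&data[i + 16]), _MM_HINT_T0);
        }
        // Process current element
        process(data[i]);
    }
}
```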

Prefetching is most effective when:

- Memory access patterns are predictable but not simple enough for hardware prefetchers

- There’s sufficient computation between the prefetch and actual use

- You’re not already memory-bandwidth limited
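Sequential scans such as processData are usually handled well by the CPU’s hardware prefetchers. Software prefetching tends to pay off for predictable but irregular patterns, for example indirect access through an index array, where the next address is known to the program but generally not to the hardware. The sketch below uses GCC/Clang’s __builtin_prefetch to request data[idx[i + distance]] ahead of use. gatherSum and the distance of 16 are illustrative assumptions; the right distance has to be found by measurement.

```cpp
#include <cstddef>
#include <cstdint>

// Sums data[idx[i]] for all i. The addresses follow the index array,
// which the program knows in advance but a hardware prefetcher generally does not.
float gatherSum(const float* data, const std::uint32_t* idx, std::size_t n) {
    constexpr std::size_t kDistance = 16;  // tuning parameter (assumed)
    float sum = 0.0f;
    for (std::size_t i = 0; i < n; ++i) {
        if (i + kDistance < n) {
            // Arguments: address, 0 = prefetch for reading, 3 = high temporal locality
            __builtin_prefetch(&data[idx[i + kDistance]], 0, 3);
        }
        sum += data[idx[i]];
    }
    return sum;
}
```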

Concurrent Programming Optimizations

Today’s hardware is increasingly parallel, from multi-core CPUs to many-core GPUs. C++17 and beyond provide powerful tools for concurrent and asynchronous programming. With great power comes great ordering responsibility.

A programmer had a problem. He thought – “I know, I’ll use async!”

has problems Now . two he

– @jenmsft, 19 May 2022

1. C++17 Parallel Algorithms

The C++17 Parallel Algorithms library provides a simple way to parallelize standard algorithms:

```cpp
#include <algorithm>
#include <execution>
#include <vector>

int main() {
    std::vector<float> data(10000000);
    // Fill data...

    // Sequential sort
    std::sort(data.begin(), data.end());

    // Parallel sort (with GCC's libstdc++ this typically requires linking TBB, e.g. -ltbb)
    std::sort(std::execution::par, data.begin(), data.end());

    // Parallel unsequenced sort (also permits vectorization)
    std::sort(std::execution::par_unseq, data.begin(), data.end());
}
```

When to use each execution policy:

- std::execution::seq: For small datasets, or when the operations must run on the calling thread
- std::execution::par: For larger datasets where parallelization outweighs the threading overhead
- std::execution::par_unseq: For compute-intensive operations that can benefit from both threading and vectorization (the element operations must not take locks or otherwise synchronize)

2. Memory Order Optimization for Lock-Free Programming

C++11 introduced a memory model with relaxed memory orderings for performance optimization in concurrent code:

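```cpp
#include <atomic>
#include <thread>

std::atomic<bool> flag{false};
std::atomic<int> data{0};

// Thread 1
void producer() {
    data.store(42, std::memory_order_relaxed);
    // Release: writes before this store become visible to any thread
    // whose acquire load of flag reads true
    flag.store(true, std::memory_order_release);
}

// Thread 2
void consumer() {
    while (!flag.load(std::memory_order_acquire)) {
        // Wait for flag
    }
    // Guaranteed to read 42 thanks to the release/acquire pair on flag
    int value = data.load(std::memory_order_relaxed);
    // Use value...
    (void)value;
}

int main() {
    std::thread t2(consumer);
    std::thread t1(producer);
    t1.join();
    t2.join();
}
```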

Relaxed memory ordering can significantly improve performance on architectures with weak memory models (like ARM), but requires careful reasoning about synchronization requirements.
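A common case where memory_order_relaxed is safe is a statistics counter: each increment only needs to be atomic, no other data is published through the counter, and the final value is read after the threads have been joined (join() itself provides the necessary synchronization). countEvents is a hypothetical name for this sketch.

```cpp
#include <atomic>
#include <thread>
#include <vector>

// Each thread increments a shared counter. Only the total matters, so the
// increments need atomicity but no ordering relative to other memory.
long countEvents(int num_threads, int events_per_thread) {
    std::atomic<long> counter{0};
    std::vector<std::thread> workers;
    for (int t = 0; t < num_threads; ++t) {
        workers.emplace_back([&counter, events_per_thread] {
            for (int i = 0; i < events_per_thread; ++i) {
                counter.fetch_add(1, std::memory_order_relaxed);
            }
        });
    }
    // join() synchronizes with each finished thread, so the load below
    // sees every increment
    for (auto& w : workers) {
        w.join();
    }
    return counter.load(std::memory_order_relaxed);
}
```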

Case Study: Optimizing a Particle Simulation

Let’s apply multiple optimization techniques to a real-world scenario: optimizing a particle simulation system. This case study demonstrates how combining different techniques yields multiplicative performance improvements.

The Original Code

Our starting point is a simple particle simulation that updates positions based on velocities and forces:

```cpp
#include <vector>

struct Vector3 {
    float x, y, z;
};

struct Particle {
    Vector3 position;  // Position in 3D space
    Vector3 velocity;  // Velocity vector
    Vector3 force;     // Accumulated force vector
    float mass;        // Particle mass
};

class ParticleSystem {
private:
    std::vector<Particle> particles;

public:
    void update(float dt) {
        for (auto& p : particles) {
            // Update velocity based on force
            p.velocity.x += (p.force.x / p.mass) * dt;
            p.velocity.y += (p.force.y / p.mass) * dt;
            p.velocity.z += (p.force.z / p.mass) * dt;

            // Update position based on velocity
            p.position.x += p.velocity.x * dt;
            p.position.y += p.velocity.y * dt;
            p.position.z += p.velocity.z * dt;

            // Reset forces
            p.force.x = 0.0f;
            p.force.y = 0.0f;
            p.force.z = 0.0f;
        }
    }
};
```

This implementation has several performance issues:

- Poor cache locality when processing particles

- No vectorization despite the inherently parallel nature

- Memory access pattern causing cache misses

- No parallelism across CPU cores

Step 1: Restructure Data for Better Cache Locality

First, we restructure our data to improve cache locality and enable vectorization:

```cpp
#include <cstddef>
#include <vector>

// Structure of Arrays layout
class ParticleSystem {
private:
    // Position components
    std::vector<float> pos_x;
    std::vector<float> pos_y;
    std::vector<float> pos_z;

    // Velocity components
    std::vector<float> vel_x;
    std::vector<float> vel_y;
    std::vector<float> vel_z;

    // Force components
    std::vector<float> force_x;
    std::vector<float> force_y;
    std::vector<float> force_z;

    // Mass values
    std::vector<float> mass;

public:
    void update(float dt) {
        const std::size_t count = pos_x.size();
        for (std::size_t i = 0; i < count; ++i) {
            // Update velocity based on force
            vel_x[i] += (force_x[i] / mass[i]) * dt;
            vel_y[i] += (force_y[i] / mass[i]) * dt;
            vel_z[i] += (force_z[i] / mass[i]) * dt;

            // Update position based on velocity
            pos_x[i] += vel_x[i] * dt;
            pos_y[i] += vel_y[i] * dt;
            pos_z[i] += vel_z[i] * dt;

            // Reset forces for next frame
            force_x[i] = 0.0f;
            force_y[i] = 0.0f;
            force_z[i] = 0.0f;
        }
    }
};
```

This Structure of Arrays (SoA) approach improves cache locality because we access each component array sequentially, maximizing cache line utilization.
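If existing code already produces particles in the original Particle layout, one option is to convert once at load time instead of rewriting every producer. The sketch below repeats the Vector3/Particle definitions so it is self-contained; ParticleArrays and toSoA are hypothetical names, and in the ParticleSystem class above the arrays are private members rather than a public struct.

```cpp
#include <cstddef>
#include <vector>

struct Vector3 { float x, y, z; };

struct Particle {
    Vector3 position;
    Vector3 velocity;
    Vector3 force;
    float mass;
};

// One contiguous array per field (Structure of Arrays)
struct ParticleArrays {
    std::vector<float> pos_x, pos_y, pos_z;
    std::vector<float> vel_x, vel_y, vel_z;
    std::vector<float> force_x, force_y, force_z;
    std::vector<float> mass;
};

// Convert Array of Structures to Structure of Arrays in a single pass
ParticleArrays toSoA(const std::vector<Particle>& particles) {
    ParticleArrays soa;
    const std::size_t n = particles.size();
    // Reserve once so the push_backs below never reallocate
    for (auto* v : {&soa.pos_x, &soa.pos_y, &soa.pos_z,
                    &soa.vel_x, &soa.vel_y, &soa.vel_z,
                    &soa.force_x, &soa.force_y, &soa.force_z, &soa.mass}) {
        v->reserve(n);
    }
    for (const Particle& p : particles) {
        soa.pos_x.push_back(p.position.x);
        soa.pos_y.push_back(p.position.y);
        soa.pos_z.push_back(p.position.z);
        soa.vel_x.push_back(p.velocity.x);
        soa.vel_y.push_back(p.velocity.y);
        soa.vel_z.push_back(p.velocity.z);
        soa.force_x.push_back(p.force.x);
        soa.force_y.push_back(p.force.y);
        soa.force_z.push_back(p.force.z);
        soa.mass.push_back(p.mass);
    }
    return soa;
}
```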

Step 2: Apply SIMD Vectorization

Next, we explicitly vectorize the update loop using SIMD instructions:

```cpp
#include <immintrin.h>

// Replaces ParticleSystem::update from Step 1 (requires AVX,
// e.g. -mavx or -march=x86-64-v3)
void update(float dt) {
    const std::size_t count = pos_x.size();
    const __m256 dt_vec = _mm256_set1_ps(dt);  // Broadcast dt to all lanes

    std::size_t i = 0;
    // Process 8 particles at once with AVX (full blocks only)
    for (; i + 8 <= count; i += 8) {
        // Load mass values
        __m256 mass_vec = _mm256_loadu_ps(&mass[i]);

        // Load force components
        __m256 fx_vec = _mm256_loadu_ps(&force_x[i]);
        __m256 fy_vec = _mm256_loadu_ps(&force_y[i]);
        __m256 fz_vec = _mm256_loadu_ps(&force_z[i]);

        // Calculate force / mass
        fx_vec = _mm256_div_ps(fx_vec, mass_vec);
        fy_vec = _mm256_div_ps(fy_vec, mass_vec);
        fz_vec = _mm256_div_ps(fz_vec, mass_vec);

        // Multiply by dt
        fx_vec = _mm256_mul_ps(fx_vec, dt_vec);
        fy_vec = _mm256_mul_ps(fy_vec, dt_vec);
        fz_vec = _mm256_mul_ps(fz_vec, dt_vec);

        // Load velocity components
        __m256 vx_vec = _mm256_loadu_ps(&vel_x[i]);
        __m256 vy_vec = _mm256_loadu_ps(&vel_y[i]);
        __m256 vz_vec = _mm256_loadu_ps(&vel_z[i]);

        // Update velocities
        vx_vec = _mm256_add_ps(vx_vec, fx_vec);
        vy_vec = _mm256_add_ps(vy_vec, fy_vec);
        vz_vec = _mm256_add_ps(vz_vec, fz_vec);

        // Store updated velocities
        _mm256_storeu_ps(&vel_x[i], vx_vec);
        _mm256_storeu_ps(&vel_y[i], vy_vec);
        _mm256_storeu_ps(&vel_z[i], vz_vec);

        // Load position components
        __m256 px_vec = _mm256_loadu_ps(&pos_x[i]);
        __m256 py_vec = _mm256_loadu_ps(&pos_y[i]);
        __m256 pz_vec = _mm256_loadu_ps(&pos_z[i]);

        // Update positions
        px_vec = _mm256_add_ps(px_vec, _mm256_mul_ps(vx_vec, dt_vec));
        py_vec = _mm256_add_ps(py_vec, _mm256_mul_ps(vy_vec, dt_vec));
        pz_vec = _mm256_add_ps(pz_vec, _mm256_mul_ps(vz_vec, dt_vec));

        // Store updated positions
        _mm256_storeu_ps(&pos_x[i], px_vec);
        _mm256_storeu_ps(&pos_y[i], py_vec);
        _mm256_storeu_ps(&pos_z[i], pz_vec);

        // Reset forces with a zeroed vector
        _mm256_storeu_ps(&force_x[i], _mm256_setzero_ps());
        _mm256_storeu_ps(&force_y[i], _mm256_setzero_ps());
        _mm256_storeu_ps(&force_z[i], _mm256_setzero_ps());
    }

    // Handle remaining particles (count not divisible by 8) with scalar code
    for (; i < count; ++i) {
        vel_x[i] += (force_x[i] / mass[i]) * dt;
        vel_y[i] += (force_y[i] / mass[i]) * dt;
        vel_z[i] += (force_z[i] / mass[i]) * dt;
        pos_x[i] += vel_x[i] * dt;
        pos_y[i] += vel_y[i] * dt;
        pos_z[i] += vel_z[i] * dt;
        force_x[i] = force_y[i] = force_z[i] = 0.0f;
    }
}
```

This explicit vectorization processes 8 particles in parallel, giving a substantial speedup.

Step 3: Add Memory Prefetching

To further reduce memory latency, we add prefetching hints:

```cpp
void update(float dt) {
    const std::size_t count = pos_x.size();
    const __m256 dt_vec = _mm256_set1_ps(dt);
    // Look ahead 4 iterations (32 elements)
    constexpr std::size_t prefetch_distance = 32;

    std::size_t i = 0;
    for (; i + 8 <= count; i += 8) {
        // Prefetch data that will be needed in future iterations,
        // skipping it near the end so we never touch out-of-range elements
        if (i + prefetch_distance < count) {
            const std::size_t j = i + prefetch_distance;
            _mm_prefetch(reinterpret_cast<const char*>(&pos_x[j]), _MM_HINT_T0);
            _mm_prefetch(reinterpret_cast<const char*>(&pos_y[j]), _MM_HINT_T0);
            _mm_prefetch(reinterpret_cast<const char*>(&pos_z[j]), _MM_HINT_T0);
            _mm_prefetch(reinterpret_cast<const char*>(&vel_x[j]), _MM_HINT_T0);
            _mm_prefetch(reinterpret_cast<const char*>(&vel_y[j]), _MM_HINT_T0);
            _mm_prefetch(reinterpret_cast<const char*>(&vel_z[j]), _MM_HINT_T0);
            _mm_prefetch(reinterpret_cast<const char*>(&force_x[j]), _MM_HINT_T0);
            _mm_prefetch(reinterpret_cast<const char*>(&force_y[j]), _MM_HINT_T0);
            _mm_prefetch(reinterpret_cast<const char*>(&force_z[j]), _MM_HINT_T0);
            _mm_prefetch(reinterpret_cast<const char*>(&mass[j]), _MM_HINT_T0);
        }

        // SIMD processing as before...
        // The same SIMD code from Step 2 (using dt_vec)
    }

    // Scalar tail for the remaining particles, as in Step 2
}
```

Prefetching helps hide memory latency by requesting data before it’s needed, so it can be loaded into cache while other computations are happening. The prefetch distance (32 elements, or 4 iterations ahead) aims to give the memory subsystem enough time to load the data without prefetching so far ahead that useful data is evicted from cache. Treat it as a tuning parameter to measure on your target hardware; for purely sequential streams like these, the hardware prefetchers may already be doing much of this work.

Step 4: Parallelize with Multi-threading

Finally, we parallelize the update across multiple CPU cores using C++17’s parallel algorithms:

```cpp
#include <algorithm>
#include <execution>
#include <immintrin.h>
#include <numeric>  // std::iota
#include <vector>

void update(float dt) {
    const std::size_t count = pos_x.size();

    // Calculate how many full blocks of 8 particles we have
    const std::size_t num_blocks = count / 8;

    // Create a vector of block indices to process in parallel
    std::vector<std::size_t> block_indices(num_blocks);
    std::iota(block_indices.begin(), block_indices.end(), std::size_t{0});  // 0, 1, 2, ...

    // Process blocks in parallel; blocks touch disjoint elements, so no locking is needed
    std::for_each(std::execution::par_unseq,
                  block_indices.begin(),
                  block_indices.end(),
                  [this, dt](std::size_t block) {
                      // Starting index for this block
                      const std::size_t i = block * 8;
                      const __m256 dt_vec = _mm256_set1_ps(dt);

                      // Process this block of 8 particles using the same SIMD code
                      // as in previous steps
                      __m256 mass_vec = _mm256_loadu_ps(&mass[i]);
                      // Load and process forces, velocities, positions
                      // (Same SIMD code as in Step 2)
                      // ...
                  });

    // Handle remaining particles with scalar code
    for (std::size_t i = num_blocks * 8; i < count; ++i) {
        // Scalar implementation for tail elements
    }
}
```

This distributes the workload across the available CPU cores.

Results

Each optimization built on the previous one, delivering multiplicative performance improvements:

| Version | Time (ms) | Speedup |
|---|---|---|
| Original | 42.3 | 1.0x |
| Data Restructuring | 25.1 | 1.7x |
| SIMD Vectorization | 7.8 | 5.4x |
| Memory Prefetching | 6.5 | 6.5x |
| Multi-threading (8 cores) | 1.2 | 35.3x |
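“Multiplicative” here means the step-to-step speedups compound: each ratio compares one row with the row before it, and the ratios multiply out to the overall figure. Using the times from the table:

$$
\underbrace{\frac{42.3}{25.1}}_{\approx 1.69}\times
\underbrace{\frac{25.1}{7.8}}_{\approx 3.22}\times
\underbrace{\frac{7.8}{6.5}}_{=\,1.20}\times
\underbrace{\frac{6.5}{1.2}}_{\approx 5.42}
= \frac{42.3}{1.2} \approx 35.3
$$

Viewed per step, prefetching added about 1.2x, and multi-threading on 8 cores added about 5.4x, short of the ideal 8x.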

This case study demonstrates how different optimization techniques address different bottlenecks:

- Data restructuring improves memory access patterns

- SIMD vectorization increases computational throughput

- Prefetching reduces memory latency

- Multi-threading exploits parallel hardware

When combined, these optimizations achieve a total speedup far greater than any single technique could provide.

Optimization Best Practices

As we’ve explored these advanced optimization techniques, keep these best practices in mind:

1. Measure Before and After Optimization

Always profile before and after optimization to ensure your changes are having the intended effect. Guesswork often leads to wasted effort or even performance degradation.

2. Focus on High-Impact Areas

Apply the Pareto principle: typically, 80% of performance issues come from 20% of your code. Use profiling to identify those critical hot spots.

3. Create Maintainable Abstractions

Encapsulate complex optimizations behind clean interfaces:

```cpp
// Instead of exposing SIMD details everywhere
class ParticleProcessor {
public:
    void updatePositions(float dt) {
        // Complex, optimized implementation inside
    }
};

// Client code remains clean
particleProcessor.updatePositions(timeStep);
```

4. Document Optimization Decisions

Make the rationale behind complex optimizations clear to future maintainers:

```cpp
// This loop uses manual unrolling and a specific access pattern
// to maximize cache line utilization and prevent false sharing
// between threads. Benchmarks show a 3.2x improvement on our
// target hardware (Intel Xeon E5-2680v4).
```

5. Balance Performance and Portability

When using hardware-specific features, consider providing fallbacks:

```cpp
// Use runtime feature detection
if (supportsAVX512()) {
    processVectorAVX512();
} else if (supportsAVX2()) {
    processVectorAVX2();
} else {
    processVectorScalar();
}
```
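The supportsAVX512()/supportsAVX2() checks above are placeholders. With GCC or Clang on x86, one concrete way to implement this pattern is __builtin_cpu_supports combined with the target function attribute, which lets a single source file contain an AVX2-enabled variant. In the sketch below (function names are hypothetical), the implementation is selected once and stored in a function pointer. The AVX2 variant is the same plain loop, which the compiler may auto-vectorize with AVX2 instructions at -O3.

```cpp
#include <cstddef>

// Portable fallback: plain scalar loop
void scaleScalar(float* v, std::size_t n, float s) {
    for (std::size_t i = 0; i < n; ++i) {
        v[i] *= s;
    }
}

#if defined(__x86_64__) || defined(__i386__)
// Same loop, compiled with AVX2 enabled via the target attribute.
// Must only be called on CPUs that support AVX2.
__attribute__((target("avx2")))
void scaleAVX2(float* v, std::size_t n, float s) {
    for (std::size_t i = 0; i < n; ++i) {
        v[i] *= s;
    }
}
#endif

using ScaleFn = void (*)(float*, std::size_t, float);

// Query the running CPU once and return the best available implementation
ScaleFn selectScale() {
#if defined(__x86_64__) || defined(__i386__)
    __builtin_cpu_init();
    if (__builtin_cpu_supports("avx2")) {
        return scaleAVX2;
    }
#endif
    return scaleScalar;
}
```

Calling selectScale() once at startup and reusing the returned pointer keeps the feature check out of hot loops.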

Conclusion: The Full Picture of C++ Optimization

The most effective optimization strategy combines all of these techniques in a structured process:

- Build a stable foundation - Ensure correctness before optimization

- Measure to identify bottlenecks - Use profiling to find the critical 3%

- Start with algorithm and data structure improvements - Often provides the largest gains with minimal complexity

- Apply modern C++ features - Leverage language features for both performance and maintainability

- Optimize for the memory hierarchy - Focus on cache-friendly access patterns

- Exploit parallelism - Use vectorization and multi-threading where appropriate

- Fine-tune with compiler optimizations - Let the compiler do the heavy lifting where possible

Remember that performance optimization is a continuous process rather than a one-time effort. As hardware evolves, compilers improve, and C++ standards advance, new optimization opportunities emerge.

The most valuable skill for performance optimization isn’t memorizing specific techniques–it’s developing a systematic approach to measurement, analysis, and improvement. By combining a solid understanding of C++ features with knowledge of underlying hardware principles, you can create code that’s not only fast but also maintainable, portable, and correct.

What optimization challenge will you tackle next with your modernized C++ toolkit?