Performance as Product Management

Let's apply product thinking to how we develop people.

Year-end review season. That special time when many leaders pretend a once-a-year conversation can meaningfully capture twelve months of growth, struggle, and achievement.

As I prep for my own team’s reviews, I’m struck by how traditional performance management is exactly what we’d never tolerate in product development: a waterfall process with annual releases and little to no user feedback loops.

Research shows only 14% of employees believe performance reviews help improve their performance. If a product feature had a 14% success rate, we’d kill it immediately. So why do we persist?

Chart: Performance Review Success Rate (Source: NCBI Research on Performance Management)

“The annual review’s biggest limitation is its emphasis on holding employees accountable for what they did last year, at the expense of improving performance now and in the future.”

The Product We’d Never Ship

Imagine proposing this product to your team:

- Updates ship once per year

- No iteration based on user feedback

- Success metrics focus on past behavior, not future outcomes

- The primary user experience is anxiety and frustration

- Zero real-time data or continuous improvement

You’d be laughed out of the room. Yet this describes most performance management systems.

If performance reviews worked, we wouldn’t need research telling us they don’t. The evidence is in every awkward year-end conversation where nothing discussed is actually new.

The irony is that I had this same conversation with my own leader almost 20 years ago. In my overeager youth, I even created a PowerPoint explaining how the review process failed both of us and how we could adjust it. He empathized and loved the ideas, but nothing changed; the problem was institutional.

I wish I could find that old PowerPoint. Shout-out to you, Arlyn!

What Product Thinking Teaches Us

In product management, we’ve learned that continuous feedback loops beat big bang releases every time. We prototype, test, learn, and iterate. We focus on user outcomes, not feature outputs. We measure leading indicators, not just lagging ones.

As I’ve written about building psychological safety, the best teams create environments where feedback flows naturally. Performance discussions should never be surprises—they should be summaries of ongoing conversations.

From Evaluation to Enablement

Gartner research shows that organizations making performance reviews forward-looking instead of backward-looking see up to a 13% improvement in employee performance. The shift is fundamental: stop judging the past, start enabling the future.

Chart: Future-Focused Performance Impact (Source: Gartner Research on Performance Management)

First, let’s look at how the traditional annual review plays out.

- Each year, intentional or not, we’re crossing an ocean of unknown opportunities.

- Do we know why we’re doing it? How we’re getting there? How we’ll know we’ve arrived?

- Do we even know when to turn back and when to keep going?

With that in mind, here’s how product thinking transforms performance management:

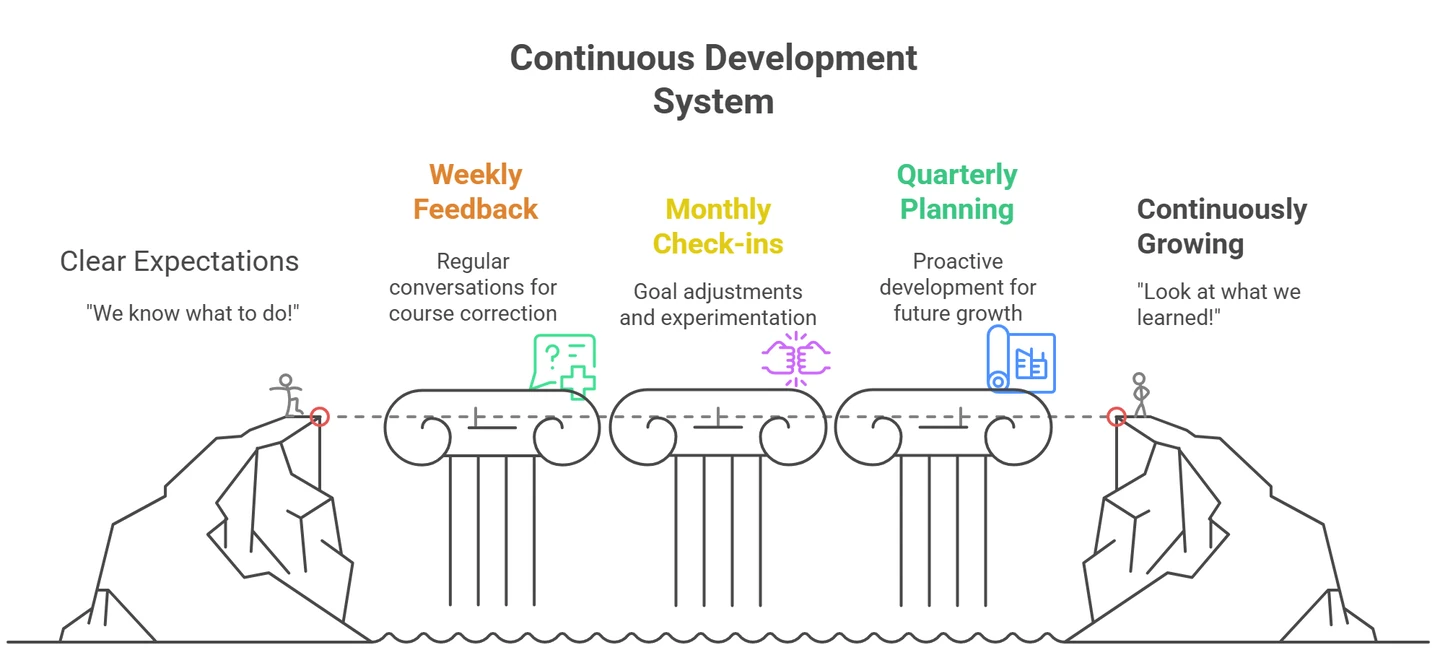

1. Continuous Deployment, Not Annual Releases

Traditional approach:

- Annual review cycle

- Formal documentation

- Backward-looking

- Manager-driven evaluation

Product approach:

- Continuous feedback

- Lightweight documentation

- Forward-looking

- Collaborative development

Just as we deploy code daily, feedback should flow continuously. I use a modified version of my 3-2-1 feedback method in regular 1:1s:

3-2-1 Continuous Feedback Method

With weekly 1:1s, this creates 52 mini-reviews per year instead of one massive one.

The hidden challenge? Many managers fear giving continuous feedback because they’ve never learned how. They worry about demotivating team members or creating conflict. I’ve learned, however, that clarity is exactly what most of us crave. The discomfort of a difficult conversation is nothing compared to the anxiety of not knowing where you stand. Start small: one piece of specific, actionable feedback per week builds the muscle for both manager and employee.

2. User-Centric Design (The Employee IS the User)

- Traditional approach: Manager evaluates employee performance

- Product approach: Two-way collaboration on development

The best product teams obsess over user needs. In performance management, your employee is the user. The conversation should focus on their goals, their blockers, their growth aspirations. As research from Harvard Business Review notes, the focus is shifting from accountability to learning.

But here’s where the metaphor gets tricky: unlike product users who can simply choose another app, employees have careers, mortgages, and families depending on these conversations. The stakes are profoundly human.

3. Measure Outcomes, Not Activities

Traditional metrics:

- Hours worked

- Meetings attended

- Behavior ratings

- Competency scores

We don’t measure product success by lines of code written. Why measure people success by hours worked or meetings attended? Instead, focus on what the person actually achieves and contributes:

- Impact delivered to customers/team

- Skills developed and applied

- Problems solved independently

- Knowledge shared with others

4. Sprint Retrospectives for Humans

- Traditional approach: Annual backward-looking review

- Product approach: Regular retrospectives focused on improvement

Every sprint, engineering teams ask: What worked? What didn’t? What will we try differently? Applied to people development, that rhythm looks like this:

- Monthly development check-ins (not status updates)

- Quarterly goal adjustments based on learning

- Focus on experiments: “Let’s try X and see if it helps you grow in Y”

I’ve found the most powerful question in these retrospectives is: “What would make you excited to come to work tomorrow?” The answers reveal far more about development needs than any competency matrix.

With that foundation laid, these four pillars form our bridge from start to finish. As a leader, I’m accountable for building them. Yes, there are intentional gaps: your team has to take a few leaps, learn, and grow too, but you’re there to enable and empower them along the way.

Building Your Development Backlog

In product management, we maintain a prioritized backlog. Why not for people development? Work with each team member to build their personal development backlog.

Personal Development Backlog Structure

Near-term (This Quarter)

- Specific skills to practice

- Stretch assignments to attempt

- Feedback areas to focus on

Medium-term (This Year)

- Capabilities to build

- Roles to explore

- Relationships to develop

Long-term (Career Vision)

- Where they want to be in 3-5 years

- Skills gaps to address

- Experiences needed

This isn’t a performance improvement plan—it’s a growth roadmap everyone should have.

Consider it a tool for measuring accountability, too: not just the employee’s, but your own. Are you properly grooming the backlog? Are you enabling partnerships and advocating for your people? Are you measuring and reacting accordingly? Just as with a product backlog, putting pen to paper holds you, as a leader, accountable.

Talent is part of the equation, but when you combine talent with accountability and authenticity, it is tough to beat.

–David Ross

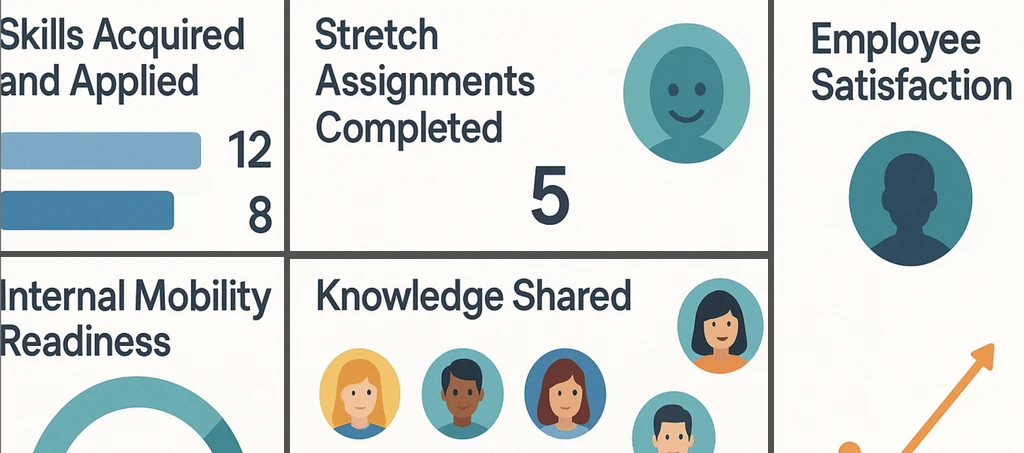

The Data That Actually Matters

Just as we track product metrics, track development metrics—but make them forward-looking:

Traditional metrics:

- Performance ratings

- Goal achievement percentages

- Competency scores

Development metrics:

- Skills acquired and applied

- Stretch assignments completed

- Internal mobility readiness

- Knowledge shared (mentoring, documentation, teaching)

- Career velocity (progression toward stated goals)

Common Objections and Reality

“But we need documentation for HR!” You need documentation of growth and development too. Continuous feedback creates better documentation than annual summaries. Every 1:1 note, every goal adjustment, every stretch assignment—that’s your documentation.

“How do we handle compensation without ratings?” The same way you price products—based on market value and impact delivered. Ratings are a poor proxy for either. Track contribution and growth, not arbitrary scales.

“But employees want to know where they stand!” They want to know how to grow and succeed. Traditional ratings tell them neither. Clear growth paths and regular feedback tell them both.

“What about bias and subjectivity?” This is real. Just like product reviews can be skewed by one bad experience, performance discussions can be colored by recent events or personal dynamics. The solution isn’t pretending objectivity exists—it’s acknowledging bias and building systems to counteract it:

- Multiple perspectives (like 360 reviews, but lightweight and continuous)

- Focus on specific behaviors and outcomes, not general impressions

- Regular calibration discussions across managers

- Employee self-assessments as critical input

Making the Shift

Ready to treat performance like a product to continuously improve? Start here:

Kill the surprise factor. If anything in a year-end review is new information, you’ve failed at continuous feedback.

Flip the ratio. Make 80% of performance conversations about the future, 20% about the past.

Create development sprints. Work in quarters, not years. Set learning goals, try experiments, review results.

Measure growth velocity. Track how fast people are developing new capabilities, not how well they maintained existing ones.

Make it bidirectional. Every performance conversation should improve both the employee’s performance and your management approach.

The Year-End Review Reimagined

As I prepare for this year’s reviews, I’m not drafting evaluations. I’m synthesizing a year of continuous conversations into forward-looking development plans. Each review will answer three questions:

- What did we learn about your strengths and growth areas this year?

- Where do you want to be this time next year?

- What experiments will we run in Q1 to accelerate your journey?

The documentation is important, but it’s a milestone marker, not a judgment. It’s a commit message in their development journey, not a final release.

What would happen if you treated your next performance review as a product planning session instead of an evaluation? What if you measured your success as a manager by how fast your people grow rather than how accurately you rate them?