From Agentic Hype to Strategic Reality

Cutting through vendor marketing to build practical frameworks for successful agentic AI implementation.

The Implementation Reality Gap

Every week, another vendor slides into your DMs with promises of “revolutionary agentic AI solutions” that will “transform your entire business.” The marketing drumbeat is deafening: Gartner names agentic AI as the top strategic technology trend for 2025, IBM declares 2025 “the year of the agent”, and McKinsey positions agents as “the next frontier” of AI innovation.

But here’s what the glossy whitepapers don’t tell you: nearly eight in ten companies report using generative AI, yet roughly the same percentage report no material impact on earnings. McKinsey calls this the “gen AI paradox”—widespread adoption with minimal business impact.

Now, as the industry pivots from generative AI to agentic AI, the same pattern is emerging. The promise is intoxicating, but IBM research finds that “most organizations aren’t agent-ready” despite the vendor push. A recent IBM and Morning Consult survey of more than 1,000 enterprise developers found that 99% are exploring or developing AI agents, yet their top concern is trustworthiness, which highlights the gap between technical possibility and practical implementation.

Having built both successful and failed AI implementations, I can tell you the gap between agentic theory and implementation reality is vast. This isn’t another explainer of what agentic AI could theoretically do. It’s a practical guide for those of us who bridge product and engineering and who have to navigate the space between hype and actual value creation.

The Foundational Reality Check

Before diving into implementation frameworks, let’s establish what we’re actually dealing with. In my previous posts, I distinguished between agent-based architecture and agentic behavior, and I made the case for strategic AI integration over flashy features. Those pieces laid the theoretical groundwork. Now we need to address the messy realities of implementation.

What the Research Actually Shows

McKinsey’s latest research reveals a critical imbalance: “horizontal” use cases like employee copilots have scaled quickly but deliver diffuse benefits, while higher-impact “vertical” function-specific use cases seldom make it out of pilot phase due to technical, organizational, data, and cultural barriers.

Stanford’s 2025 AI Index found that while the inference cost of a system performing at GPT-3.5 level dropped more than 280-fold between November 2022 and October 2024, only 1% of company executives describe their generative AI rollouts as “mature”. The technology is getting cheaper and more capable, but organizational readiness isn’t keeping pace.

This creates what I call the “capability-readiness gap”: the space between what AI agents can technically do and what organizations can actually implement successfully.

The Three Pillars of Agentic Readiness

Based on analysis of successful implementations and my work with enterprise teams, agentic readiness rests on three foundational pillars:

1. Infrastructure Readiness

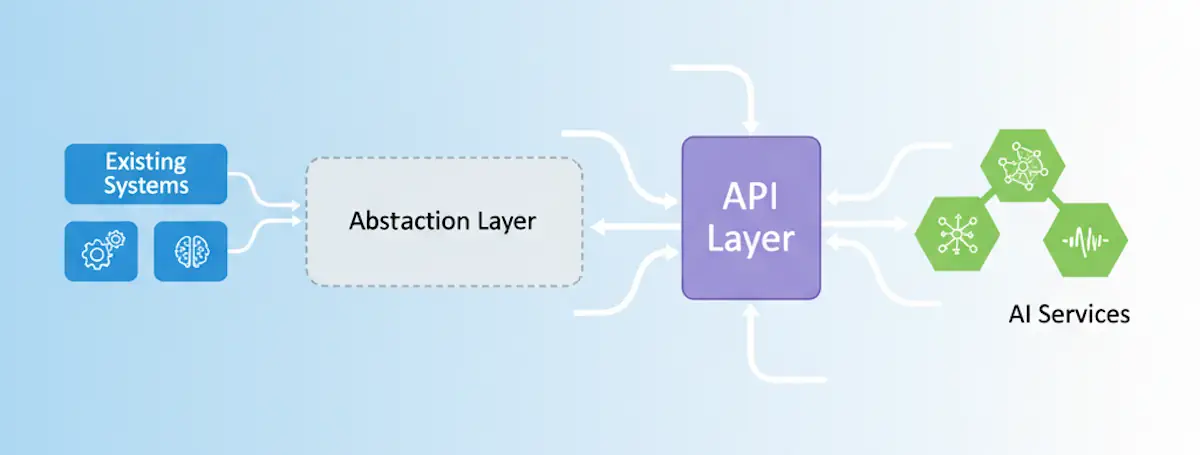

As IBM’s research emphasizes: “What’s going to be interesting is exposing the APIs that you have in your enterprises today. That’s where the exciting work is going to be. And that’s not about how good the models are going to be. That’s going to be about how enterprise-ready you are”.

The Infrastructure Assessment Framework:

- API Maturity: Can your systems expose clean, documented APIs for agent interaction?

- Data Accessibility: Is your data structured and accessible without manual intervention?

- Security Boundaries: Do you have granular permission systems for automated access?

- Monitoring Capabilities: Can you track agent behavior across your entire tech stack?

Most organizations discover their infrastructure isn’t agent-ready when they attempt their first serious implementation. The typical enterprise has grown organically over decades, creating a patchwork of systems that humans navigate through institutional knowledge and workarounds—exactly what agents can’t handle.
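A team can review the four assessment questions above more easily as a pass/fail checklist that records the evidence behind each verdict. This is a minimal sketch. `ReadinessCheck` and `infrastructure_gaps` are hypothetical names. Treating any failed dimension as a gap is an assumption, not an established scoring method.

```python
from dataclasses import dataclass


@dataclass
class ReadinessCheck:
    """One infrastructure dimension plus the evidence behind the verdict (hypothetical type)."""
    dimension: str
    passed: bool
    evidence: str = ""


def infrastructure_gaps(checks: list[ReadinessCheck]) -> list[str]:
    """Return the dimensions that failed; an empty list means no known gaps."""
    return [c.dimension for c in checks if not c.passed]


# Illustrative entries only; record real findings from your own systems.
checks = [
    ReadinessCheck("API Maturity", True, "documented APIs for the target systems"),
    ReadinessCheck("Data Accessibility", False, "key data still requires manual exports"),
    ReadinessCheck("Security Boundaries", True, "scoped credentials per agent"),
    ReadinessCheck("Monitoring Capabilities", False, "no cross-system tracing of agent calls"),
]

print(infrastructure_gaps(checks))  # ['Data Accessibility', 'Monitoring Capabilities']
```

Keeping the evidence next to each verdict makes it easier to challenge an optimistic self-assessment later.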

2. Governance and Risk Management

UC Berkeley’s analysis warns that “the rapid adoption of agentic AI without adequate vetting will lead to unforeseen consequences”, while IBM research emphasizes the need for “strong compliance frameworks to keep things running smoothly without sacrificing accountability”.

The Governance Framework:

- Decision Boundaries: What decisions can agents make autonomously vs. requiring human approval?

- Error Recovery: How do you detect and correct agent mistakes before they cascade?

- Audit Trails: Can you trace every agent decision back to its reasoning and data sources?

- Human Override: How quickly can humans intervene when agents go off-track?
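Three of these four questions can be made concrete with a single checkpoint that every agent action passes through. The sketch below is hypothetical: `GovernanceGate`, `AuditRecord`, and the action names are illustrative assumptions, not any particular framework’s API. It encodes decision boundaries as allow-lists, denies anything not explicitly listed, records an audit entry for every decision, and exposes a `halted` flag as the human override.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class AuditRecord:
    """What is needed to trace a decision back to its reasoning and data sources."""
    action: str
    decision: str          # "auto_approved", "needs_human", or "blocked"
    reasoning: str
    sources: list[str]
    timestamp: str


@dataclass
class GovernanceGate:
    """Hypothetical gate combining decision boundaries, an audit trail, and a kill switch."""
    autonomous_actions: set[str]   # the agent may perform these on its own
    approval_actions: set[str]     # these require a human sign-off first
    halted: bool = False           # human override: when True, every action is blocked
    log: list[AuditRecord] = field(default_factory=list)

    def review(self, action: str, reasoning: str, sources: list[str]) -> str:
        if self.halted:
            decision = "blocked"
        elif action in self.autonomous_actions:
            decision = "auto_approved"
        elif action in self.approval_actions:
            decision = "needs_human"
        else:
            decision = "blocked"   # anything not explicitly allowed is denied
        self.log.append(AuditRecord(action, decision, reasoning, list(sources),
                                    datetime.now(timezone.utc).isoformat()))
        return decision


gate = GovernanceGate(autonomous_actions={"answer_faq"}, approval_actions={"issue_refund"})
print(gate.review("answer_faq", "matched a known FAQ entry", ["faq_db"]))            # auto_approved
print(gate.review("issue_refund", "customer reports a double charge", ["billing"]))  # needs_human
gate.halted = True  # a human pulls the override
print(gate.review("answer_faq", "matched a known FAQ entry", ["faq_db"]))            # blocked
```

Deny-by-default is the key design choice here. A new capability stays blocked until someone deliberately places it on one side of the decision boundary.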

3. Organizational Change Management

McKinsey’s research on agentic workforce management found that “some of the newer agents or the newer reps tend to embrace AI faster,” while “some of the more tenured employees resist AI quite a bit. It’s really challenging for them”. (Here “agents” means human representatives, not AI agents.)

This isn’t a technology problem—it’s a human one. Stanford research on human-AI collaboration emphasizes that “we will experience an emerging paradigm of research around how humans work together with AI agents”, but most organizations are unprepared for this transition.

The Strategic Implementation Matrix

Rather than the typical “start small and scale” advice, successful agentic implementations require strategic planning across two critical dimensions: Impact Potential and Implementation Complexity. Think of this as your strategic map for navigating the capability-readiness gap.

The Quick Wins Quadrant: Building Momentum

Customer service augmentation represents the sweet spot for most organizations. Gartner predicts that by 2029, agentic AI will autonomously resolve 80% of common customer service issues without human intervention. What makes this compelling isn’t just the ROI potential; it’s the contained scope and existing human oversight that make failure manageable.

I’ve seen organizations achieve 15-20% improvements in customer satisfaction within six months by deploying agents that handle routine inquiries while escalating complex issues to humans. The key isn’t replacing customer service representatives but augmenting their capabilities with agents that handle the repetitive tasks that burn them out.

Sales lead qualification follows a similar pattern. McKinsey research describes how AI-driven lead development can synthesize relevant product information and customer profiles to create discussion scripts and automate follow-ups. More importantly, this use case doesn’t require deep system integration: agents can work with existing CRM data to qualify leads before human sales representatives engage.

The Strategic Investment Quadrant: Transformative But Complex

Supply chain optimization sits at the opposite end of the spectrum. Stanford’s AI Index found that 61% of organizations using generative AI in supply chain management report cost savings, but the implementation complexity is substantial. Success requires extensive system integration, real-time data feeds, and sophisticated error recovery mechanisms.

One manufacturing client spent 18 months implementing agentic supply chain management, but the result was a 23% reduction in inventory costs and 40% improvement in demand forecasting accuracy. The complexity was justified by the transformative business impact.

The Learning Quadrant: Building Organizational Capabilities

Internal documentation agents and meeting summarization represent low-risk opportunities to build organizational familiarity with agentic interactions. While the immediate business impact may be modest, these implementations create the cultural foundation for more ambitious projects.

The key insight: successful agentic adoption depends on organizational learning that can’t be rushed. Teams need to develop intuition about how to work with agents, when to trust their outputs, and how to provide effective feedback.

The Avoid-For-Now Quadrant: Resource Allocation Traps

General-purpose enterprise assistants—the “do everything” agents that vendors love to demo—typically fall into the high-complexity, low-impact category. While impressive in demonstrations, they require significant system integration for unclear value propositions.

Instead of trying to automate everything, focus on specific, measurable use cases where agentic capabilities provide clear advantages over existing solutions.
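The four quadrants reduce to two threshold comparisons. The sketch below assumes each use case has been rated from 1 to 5 on impact and on complexity. The `quadrant` function, the 1 to 5 scale, and the cutoff of 3 are illustrative choices, not part of any formal method. The example ratings are invented to mirror where the text places each use case.

```python
def quadrant(impact: int, complexity: int, threshold: int = 3) -> str:
    """Place a use case on the impact/complexity matrix.

    Scores are assumed to be 1-5 ratings agreed by the team; anything at or
    above `threshold` counts as "high".
    """
    high_impact = impact >= threshold
    high_complexity = complexity >= threshold
    if high_impact and not high_complexity:
        return "Quick Win"
    if high_impact and high_complexity:
        return "Strategic Investment"
    if not high_impact and not high_complexity:
        return "Learning"
    return "Avoid For Now"  # low impact, high complexity


# Example ratings are invented for illustration.
use_cases = {
    "Customer service augmentation": (4, 2),
    "Supply chain optimization": (5, 5),
    "Meeting summarization": (2, 1),
    "General-purpose enterprise assistant": (2, 5),
}
for name, (impact, complexity) in use_cases.items():
    print(f"{name}: {quadrant(impact, complexity)}")
```

Once both ratings are agreed, the placement is mechanical. Any debate therefore belongs to the ratings, not to the quadrant labels.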

The Implementation Playbook: A Journey, Not a Checklist

Rather than prescriptive timelines, think of agentic implementation as three overlapping phases that respond to your organization’s unique readiness and learning pace.

Foundation Phase: Building From Reality

The foundation phase isn’t about technology—it’s about understanding your organization’s actual (not aspirational) readiness for autonomous systems. I’ve watched too many implementations fail because teams assumed their infrastructure was agent-ready without actually testing those assumptions.

Start with an infrastructure reality check that goes beyond API documentation. Can your systems handle programmatic access patterns that differ from human usage? Do you have the monitoring capabilities to detect when an agent starts behaving unexpectedly? Most importantly, do you have the organizational processes to respond quickly when something goes wrong?

Simultaneously, assess your team’s change readiness. IBM’s research highlights that developer concerns about agentic systems center on trustworthiness—not technical capability. Your teams need psychological safety to experiment with agents, provide honest feedback about their performance, and develop intuition about human-agent collaboration.

One practical approach: run “failure simulations” where you deliberately break agent behavior to test your organization’s response capabilities. Teams that can quickly detect, diagnose, and recover from agent failures are ready for more ambitious implementations.
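A failure drill needs two ingredients: a way to break the agent on purpose and a way to check whether your monitoring notices. The sketch below is a hypothetical harness. `run_failure_drill`, the stand-in `echo_agent`, and the empty-response check are illustrative assumptions. A real drill would inject the failure modes your agents actually exhibit, and it would separately time how long humans take to diagnose and recover.

```python
import random
from typing import Callable


def run_failure_drill(agent: Callable[[str], str],
                      detector: Callable[[str], bool],
                      queries: list[str],
                      failure_rate: float = 0.2,
                      seed: int = 7) -> dict[str, int]:
    """Break a share of agent responses on purpose and count what monitoring catches."""
    rng = random.Random(seed)  # seeded so a drill can be replayed exactly
    injected = caught = false_alarms = 0
    for query in queries:
        response = agent(query)
        broken = rng.random() < failure_rate
        if broken:
            injected += 1
            response = ""  # simulated failure: the agent returns nothing
        flagged = detector(response)
        if broken and flagged:
            caught += 1
        elif flagged:
            false_alarms += 1
    return {"injected": injected, "caught": caught,
            "missed": injected - caught, "false_alarms": false_alarms}


def echo_agent(query: str) -> str:
    """Stand-in agent for the drill."""
    return f"Answer to: {query}"


def empty_check(response: str) -> bool:
    """Stand-in monitor: flag blank responses."""
    return not response.strip()


report = run_failure_drill(echo_agent, empty_check, [f"q{i}" for i in range(50)])
print(report)
```

A non-zero `missed` count is the signal that matters. It marks failures that would have reached users unnoticed.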

Pilot Phase: Learning Through Controlled Implementation

The pilot phase focuses on building organizational competence through contained implementations that deliver immediate value while generating learning. The goal isn’t just to prove that agents work—it’s to understand how your organization works with agents.

Choose pilot implementations based on three criteria: clear success metrics, manageable failure modes, and natural learning opportunities. Customer service augmentation often works well because success is measurable (response time, resolution rate, customer satisfaction), failure modes are containable (human oversight exists), and the learning generalizes to other human-agent collaboration scenarios.

Document everything during pilots—not just what works, but what doesn’t work and why. Pay particular attention to the boundary conditions where agents perform poorly, the handoff points between agents and humans, and the types of problems that emerge only at scale.

Stanford research on collaborative AI systems emphasizes the importance of creating “shared working spaces” where agents and humans can collaborate iteratively rather than operating in isolation. Use your pilot phase to experiment with different collaboration patterns and identify what works for your organizational culture.

Scale Phase: Systematic Expansion

The scale phase transitions from project-based implementations to systematic organizational capabilities for agentic deployment. This isn’t just about implementing more agents—it’s about building the internal expertise, processes, and infrastructure to continuously identify, implement, and optimize agentic solutions.

Successful scaling requires developing internal centers of excellence that can evaluate new use cases, design implementation approaches, and provide ongoing support for deployed agents. These teams become your internal consultants, helping business units identify opportunities and navigate the complexity of agentic implementation.

McKinsey’s analysis suggests that organizations succeeding with agentic AI are those that establish “clearly defined road maps” and “track well-defined KPIs for AI solutions.”

Measuring Success Beyond the Metrics

Traditional AI metrics like accuracy and response time miss the organizational impact of agentic implementations. Focus on these strategic indicators:

Business Impact Metrics

- Decision Velocity: How much faster can your organization make key decisions?

- Resource Allocation Efficiency: Are agents freeing humans for higher-value work?

- Error Reduction: Are agents preventing mistakes in critical processes?

- Customer Experience Improvement: Are agentic interactions improving satisfaction scores?

Organizational Maturity Metrics

- Adoption Rate: What percentage of eligible use cases are successfully using agents?

- Self-Service Rate: How often can teams implement new agent capabilities without external help?

- Governance Compliance: Are all implementations following established frameworks?

- Change Readiness: How quickly can the organization adapt to new agentic capabilities?
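Two of these maturity metrics are simple ratios once use cases are tracked consistently. The sketch below uses assumed definitions, not standard ones. Adoption rate is live use cases divided by eligible use cases. Self-service rate is in-house implementations divided by live use cases. The `UseCase` type and the portfolio entries are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class UseCase:
    name: str
    eligible: bool        # judged a good fit for an agent
    live: bool            # an agent is deployed and in regular use
    built_in_house: bool  # implemented without external help


def maturity_metrics(use_cases: list[UseCase]) -> dict[str, float]:
    """Adoption = live / eligible; self-service = in-house / live (assumed definitions)."""
    eligible = [u for u in use_cases if u.eligible]
    live = [u for u in eligible if u.live]
    adoption = len(live) / len(eligible) if eligible else 0.0
    self_service = sum(u.built_in_house for u in live) / len(live) if live else 0.0
    return {"adoption_rate": adoption, "self_service_rate": self_service}


# Hypothetical portfolio for illustration.
portfolio = [
    UseCase("Ticket triage", eligible=True, live=True, built_in_house=True),
    UseCase("Lead qualification", eligible=True, live=True, built_in_house=False),
    UseCase("Meeting summaries", eligible=True, live=False, built_in_house=False),
    UseCase("Invoice matching", eligible=True, live=False, built_in_house=False),
    UseCase("Contract drafting", eligible=False, live=False, built_in_house=False),
]
print(maturity_metrics(portfolio))  # {'adoption_rate': 0.5, 'self_service_rate': 0.5}
```

Whatever definitions you choose, tracking them the same way quarter to quarter matters more than the exact formula.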

Common Implementation Failure Patterns

Across the dozens of agentic AI implementations I’ve observed, certain failure patterns recur. Understanding these patterns helps product leaders avoid predictable pitfalls and design more robust implementation strategies.

The “Big Bang” Fallacy

Organizations attempt comprehensive agent deployments across multiple functions at once, overwhelming their change management capacity and making problem isolation impossible. I’ve seen leadership teams get excited by vendor demos and try to implement agents in customer service, sales, and operations simultaneously. The result is typically chaos: teams can’t distinguish between technical issues, process problems, and user adoption challenges.

IBM research indicates the challenge isn’t model capability but “how enterprise-ready you are.” Sequential, measurable implementations with clear learning phases allow organizations to build competence systematically rather than hoping everything works simultaneously.

The Infrastructure Surprise

Teams discover critical system limitations only after beginning implementation. Despite extensive planning, organizations often uncover API limitations, data quality issues, or security constraints that weren’t apparent during evaluation phases. One financial services client spent three months building an agentic customer service system before discovering their core banking platform couldn’t handle the API call patterns agents generated.

The solution involves thorough infrastructure stress-testing before implementation begins. Run simulated agent workloads against your systems to identify bottlenecks, test your monitoring capabilities with unexpected usage patterns, and validate that your security frameworks can handle programmatic access at scale.
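A simulated agent workload can start as a burst of concurrent calls against the system under test, with latency and errors recorded. The sketch below uses only Python’s standard library. `simulated_backend_call` is a placeholder that sleeps briefly and fails 5% of the time; replace it with a client for your own system. Modeling agent traffic as bursty, highly concurrent calls is an illustrative assumption, so reproduce the call patterns your own agents actually generate.

```python
import asyncio
import random
import statistics
import time


async def simulated_backend_call(rng: random.Random) -> None:
    """Placeholder for a real API call; swap in a client for the system under test."""
    await asyncio.sleep(rng.uniform(0.001, 0.005))  # simulated network + processing time
    if rng.random() < 0.05:
        raise RuntimeError("simulated 503")         # simulated overload error


async def agent_burst(n_calls: int, concurrency: int, seed: int = 1) -> dict[str, float]:
    """Fire n_calls with at most `concurrency` in flight and summarize latency and errors."""
    rng = random.Random(seed)
    gate = asyncio.Semaphore(concurrency)  # caps how many calls run at the same time
    latencies: list[float] = []
    errors = 0

    async def one_call() -> None:
        nonlocal errors
        async with gate:
            start = time.perf_counter()
            try:
                await simulated_backend_call(rng)
            except RuntimeError:
                errors += 1
            finally:
                latencies.append(time.perf_counter() - start)

    await asyncio.gather(*(one_call() for _ in range(n_calls)))
    return {
        "calls": n_calls,
        "errors": errors,
        "p50_ms": statistics.median(latencies) * 1000,
        "p95_ms": statistics.quantiles(latencies, n=20)[-1] * 1000,  # 95th percentile
    }


if __name__ == "__main__":
    print(asyncio.run(agent_burst(n_calls=200, concurrency=50)))
```

Raise `concurrency` step by step and watch where p95 latency or the error count jumps. That point is likely your bottleneck, and this way you find it before an agent does in production.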

The Governance Afterthought

Organizations focus on technical implementation and address governance later. UC Berkeley research warns that governance frameworks must be established proactively, not reactively. Teams that try to retrofit governance onto working systems often discover that their agents have already developed problematic behaviors that are difficult to correct.

Build governance frameworks in parallel with technical development, not after deployment. This includes decision boundaries, error recovery procedures, audit trails, and human oversight mechanisms.

The Change Management Gap

Teams assume technical deployment equals organizational adoption. McKinsey’s research points to significant resistance among more tenured employees, for whom adapting to AI is “really challenging.” Technical success doesn’t automatically translate to organizational value if people don’t actually use the systems effectively.

Invest equally in change management and technical implementation. This means training, communication, process redesign, and often organizational restructuring to support human-agent collaboration.

The Vendor Evaluation Framework

When assessing agentic AI vendors, look beyond technical demos to organizational fit:

Technical Capabilities (30% of decision weight)

- Agent reasoning and planning abilities

- Integration capabilities with your tech stack

- Performance and reliability metrics

- Security and compliance features

Implementation Support (40% of decision weight)

- Change management expertise and resources

- Organizational assessment capabilities

- Governance framework development

- Training and support programs

Strategic Alignment (30% of decision weight)

- Understanding of your industry and use cases

- Ability to scale with organizational maturity

- Transparency about limitations and risks

- Long-term product roadmap alignment
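The 30/40/30 weighting above turns into a single weighted score once each category is rated. This is a minimal sketch that assumes each category gets one 1 to 5 rating. The category keys, `vendor_score`, and the two example vendors are hypothetical.

```python
import math

# Weights from the framework above: 30% technical, 40% implementation support, 30% strategic alignment.
WEIGHTS = {
    "technical": 0.30,
    "implementation_support": 0.40,
    "strategic_alignment": 0.30,
}
assert math.isclose(sum(WEIGHTS.values()), 1.0)  # weights must cover 100%


def vendor_score(ratings: dict[str, float]) -> float:
    """Weighted score from per-category ratings (assumed to be on a 1-5 scale)."""
    missing = WEIGHTS.keys() - ratings.keys()
    if missing:
        raise ValueError(f"missing ratings for: {sorted(missing)}")
    return round(sum(weight * ratings[name] for name, weight in WEIGHTS.items()), 2)


vendor_a = {"technical": 5, "implementation_support": 2, "strategic_alignment": 3}
vendor_b = {"technical": 4, "implementation_support": 4, "strategic_alignment": 4}
print(vendor_score(vendor_a), vendor_score(vendor_b))  # 3.2 4.0
```

The vendor with the stronger technical demo (A) scores 3.2 and loses to the more balanced vendor (B), which scores 4.0. Implementation support carries the largest weight.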

Building Sustainable Competitive Advantage

The organizations that will lead in the agentic era aren’t necessarily those with the most advanced AI—they’re those that build systematic capabilities for implementing, managing, and evolving agentic systems.

Develop Agentic Organizational Capabilities

- Learning Systems: How quickly can you identify successful patterns and replicate them?

- Adaptation Mechanisms: How rapidly can you adjust agent behavior as business needs evolve?

- Innovation Frameworks: How do you systematically identify new opportunities for agentic implementation?

- Risk Management: How do you balance the speed of automation with the safety of oversight?

Create Competitive Moats

Clear road maps and well-defined KPIs, the practices McKinsey associates with organizations succeeding at agentic AI, are necessary but not sufficient. Beyond implementation success, leading organizations are building sustainable advantages:

- Data Network Effects: Agents that improve with more interaction data

- Process Optimization: Workflows specifically designed for human-agent collaboration

- Organizational Learning: Institutional knowledge about effective agentic implementation

- Cultural Adaptation: Teams that naturally integrate agents into decision-making

The Path Forward: Three Next Steps

For product leaders ready to move beyond the hype:

1. Conduct a Readiness Assessment

Before evaluating any vendors or technologies, honestly assess your organization’s readiness across infrastructure, governance, and change management dimensions. This assessment should take 2-4 weeks and involve representatives from IT, operations, legal, and the business functions where you’re considering implementation.

2. Design Your Implementation Matrix

Map potential use cases across the impact-complexity matrix I outlined above. Identify 2-3 Quick Win opportunities that can deliver immediate value while building organizational capabilities. These should be implementations you can complete in 3-6 months with clear success metrics.

3. Build Governance Before You Build Agents

Establish clear frameworks for decision boundaries, error recovery, audit trails, and human oversight before implementing any agentic capabilities. As UC Berkeley research emphasizes, “collaboration among governments, industry, researchers, and civil society is essential” for developing effective governance frameworks. Start this work internally now, before external pressure pushes you to move faster than your governance can support.

Conclusion: Beyond the Hype Cycle

The agentic AI market is following a predictable pattern: inflated expectations followed by inevitable disappointment, then gradual progress toward genuine value. Product leaders who understand this cycle—and focus on building systematic implementation capabilities rather than chasing the latest demos—will create sustainable competitive advantages.

McKinsey’s analysis concludes that “agentic AI is not an incremental step—it is the foundation of the next-generation operating model”. They’re right, but with a crucial caveat: this transformation requires organizational evolution, not just technology adoption.

The question isn’t whether agentic AI will reshape how we work—it’s whether your organization will be ready to harness that transformation effectively. Start building that readiness now, one systematic implementation at a time.

What’s your organization’s first step toward agentic readiness? The foundations you build today will determine whether you lead the transformation or struggle to catch up.